Introduction To Decision Tree vs Random Forest

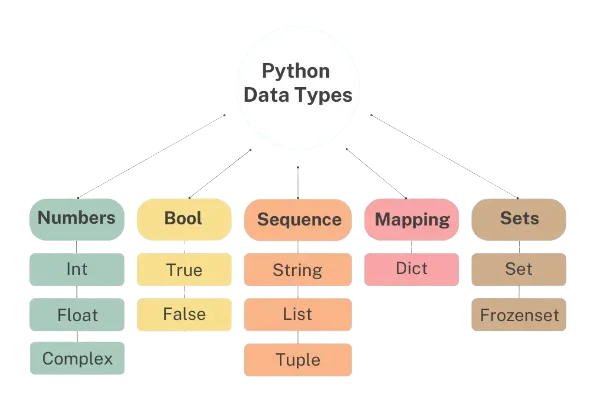

This article explains the difference between a decision tree and a random forest. Machine learning is a sub-branch of artificial intelligence that enables a system to learn from previous experience and improve. Decision trees and random forests are both supervised machine learning approaches. A decision tree is a simple diagram that helps you make decisions.

Many algorithms have grown in popularity as a result of recent breakthroughs. They help process data properly and make decisions based on it. Because so much of the world has moved online, almost anything is accessible over the internet, and making judgments about that material requires rigorous algorithms. With so many algorithms to pick from, choosing one is a complex process. Here you can read, What is Decision Tree in Artificial Intelligence?

A random forest is an ensemble classifier that builds many decision trees, each with some randomness in the data and features it uses, so the forest is made up of decision trees of various sizes and shapes.

A single decision tree is often insufficient to obtain good predictions on a much larger dataset. A random forest, on the other hand, is a combination of decision trees, and its output depends on the outputs of all of its trees.

Decision Tree

Ordering at Starbucks tests people’s decision-making ability. We must make seven or eight choices for one cup of coffee: small or big, sugar-free, strong, moderate or dark, low fat or no fat, the number of calories, and so on. That is exactly how a decision tree works.

A decision tree is a supervised machine learning algorithm that can be used for both regression and classification problems. It builds the model as a tree structure with decision nodes and leaf nodes. A decision node has two or more branches, and a leaf node represents a decision. The topmost decision node is the root node. Decision trees handle both categorical and continuous data. Compared with random forests, decision trees are better in some ways: they are easier to interpret and faster to train.

Two main types of Decision Trees:

Classification trees

A classification tree predicts a categorical outcome, such as ‘fit’ or ‘unfit.’ The decision variable in this case is categorical.

Regression trees

A regression tree predicts a continuous outcome, such as a price.

Now that we have defined what a decision tree is, let’s look at how it works inside. There are several methods for constructing decision trees, and one of the best known is the ID3 algorithm. Before delving into ID3, let’s first define a key term. Entropy, also called Shannon entropy and written H(S) for a finite set S, is a measure of the uncertainty or unpredictability in the data.


How does it work?

A decision tree works through the following steps:

Splitting

When data is fed into the decision tree, it is divided into categories along the branches.

Pruning

Pruning is the removal of branches that add little to the prediction. Trimming these surplus portions simplifies the tree and helps it describe the data without overfitting.

Pruning is complete once only the useful leaf nodes remain. It is a crucial component of decision trees.

Selection of trees

You must identify the tree that works best with your data.

Entropy

Entropy is calculated to measure how mixed the classes in a node are. If the entropy is zero, the node is homogeneous (every sample belongs to the same class); otherwise, it is not.

Information gain

The reduction in entropy produced by a split is the information gain. This gain guides how the branches are divided further.

- Compute the entropy.

- Split the data on each candidate attribute.

- Select the split with the highest information gain.
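The entropy and information-gain steps above can be sketched in a few lines of Python. This is a minimal illustration, not a full ID3 implementation: the function names and the toy 'fit'/'unfit' data are assumptions made up for the example.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy H(S), in bits, of a list of class labels."""
    total = len(labels)
    counts = Counter(labels)
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())


def information_gain(labels, groups):
    """Parent entropy minus the size-weighted entropy of the child groups."""
    total = len(labels)
    weighted = sum(len(g) / total * entropy(g) for g in groups)
    return entropy(labels) - weighted


# Hypothetical toy data: six labels and two candidate ways of splitting them.
parent = ["fit", "fit", "fit", "unfit", "unfit", "unfit"]
perfect_split = [["fit", "fit", "fit"], ["unfit", "unfit", "unfit"]]
poor_split = [["fit", "unfit", "fit"], ["unfit", "fit", "unfit"]]

print("parent entropy:", entropy(parent))
print("gain of perfect split:", information_gain(parent, perfect_split))
print("gain of poor split:", information_gain(parent, poor_split))
```

The perfect split leaves two homogeneous groups (entropy zero), so it recovers the full parent entropy as gain, while the poor split leaves both groups mixed and gains very little. A tree builder would pick the first.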

The depth of the tree is a significant consideration. The depth tells us the number of decisions that must be made before we reach a conclusion. Shallower trees often generalize better, because very deep trees tend to overfit the training data.

Advantages of the decision tree:

- Decision trees need less data preparation than many other methods.

- A decision tree does not require data normalization.

- A decision tree does not require feature scaling.

- Missing values in the data have no significant impact on the process of building a decision tree.

- A decision tree is intuitive and simple to explain to technical teams and stakeholders alike.

Disadvantages of the decision tree:

- A minor change in the data might result in a significant reshaping of the decision tree, resulting in instability.

- Compared with other algorithms, a decision tree’s calculations can sometimes be significantly more complex.

- Training a decision tree frequently takes longer than training simpler models.

- Decision tree learning is relatively costly because of the added complexity and time required.

- A single decision tree is often inadequate for predicting continuous values, because a regression tree can only output a fixed value per leaf.

Random Forest

A random forest is also trained on labeled data, but it is considerably more powerful and extremely popular. The main distinction is that it does not rely on a single decision. It gathers the decisions of many randomly built trees and then determines the conclusion by majority.

No single tree in the forest is expected to give the most accurate prediction. Instead, the forest produces a set of varied predictions. As a result, more variety is added, and the combined prediction becomes smoother.

Random forest is based on a simple yet powerful idea: the wisdom of crowds.

Before we can grasp how the random forest works, we must first understand the ensemble approach. The term “ensemble” refers to the combination of numerous models: rather than a single model, a group of models is used to make predictions.

Bagging (bootstrap aggregating) is the technique used to build random forests: each tree is trained independently on a random sample of the data, and the trees’ predictions are then combined.

1. Bagging

- Take a random sample of the training data.

- Build a decision tree on it.

- Repeat the procedure a set number of times.

- Now it is time for the big vote: the prediction with the most votes is the one you pick.

2. Bootstrapping

Bootstrapping is the process of randomly selecting items from the training data with replacement, so the same item can appear more than once in a sample.
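To make bagging and bootstrapping concrete, here is a small sketch in plain Python. It is only an illustration under simplifying assumptions: the base learner is a one-split "stump" rather than a full decision tree, the data has a single numeric feature, and every name and data point is invented for the example.

```python
import random
from collections import Counter


def bootstrap_sample(rows, rng):
    """Draw len(rows) items from rows at random, with replacement."""
    return [rng.choice(rows) for _ in rows]


def train_stump(rows):
    """Fit a one-split 'tree': choose the threshold on x with the fewest training errors."""
    best = None
    for threshold in sorted({x for x, _ in rows}):
        left = [y for x, y in rows if x <= threshold]
        right = [y for x, y in rows if x > threshold]
        left_label = Counter(left).most_common(1)[0][0]
        # If nothing falls to the right, reuse the left label as a fallback.
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    _, threshold, left_label, right_label = best
    return lambda x: left_label if x <= threshold else right_label


def bagged_predict(models, x):
    """Majority vote over the predictions of all models."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]


# Hypothetical toy data: (feature value, label) pairs.
data = [(1, "unfit"), (2, "unfit"), (3, "unfit"), (4, "unfit"),
        (6, "fit"), (7, "fit"), (8, "fit"), (9, "fit")]

rng = random.Random(0)  # fixed seed so the run is repeatable
models = [train_stump(bootstrap_sample(data, rng)) for _ in range(25)]

print(bagged_predict(models, 2), bagged_predict(models, 8))
```

Each stump sees a slightly different bootstrap sample, so individual stumps may disagree, but the vote across 25 of them is far more stable than any single one.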

Important Qualities of Random Forest

- Diversity: Not all attributes/variables/features are taken into account while building an individual tree, so each tree is unique.

- Resistance to the curse of dimensionality: Because each tree does not evaluate all features, the feature space is reduced.

- Parallelization: Each tree is built independently from different data and features, so we can fully utilize the CPU to build random forests.

- Train-test split: In a random forest, a separate train-test split is not strictly required, because each tree’s bootstrap sample leaves out roughly a third of the data (about 37%), which can serve as unseen data for that tree.

- Stability: Stability results from the fact that the outcome is based on majority voting/averaging.
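The share of data that a single tree never sees can be checked with a short calculation. When n rows are drawn with replacement, a given row is missed with probability (1 - 1/n)^n, which approaches 1/e (about 0.368) as n grows. The sketch below, with illustrative function names, compares that formula with one simulated bootstrap sample.

```python
import random


def out_of_bag_fraction(n):
    """Probability that a given row is absent from a bootstrap sample of size n."""
    return (1 - 1 / n) ** n


def simulated_oob_fraction(n, rng):
    """Draw one bootstrap sample of n row indices and measure the share left out."""
    drawn = {rng.randrange(n) for _ in range(n)}
    return 1 - len(drawn) / n


print(round(out_of_bag_fraction(1000), 3))  # 0.368
print(simulated_oob_fraction(10_000, random.Random(0)))
```

These left-out rows are what makes an out-of-bag error estimate possible: each tree can be evaluated on the rows it was not trained on.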

Random Forest has the following features and benefits:

Let’s have a look at the major advantages of random forest:

- It is one of the most accurate learning algorithms available. For many datasets it produces a highly accurate classifier.

- It works well with large databases.

- It can handle hundreds of input variables without variable deletion.

- It provides estimates of which variables are important in the classification.

- As the forest is built, it generates an internal unbiased estimate of the generalization error.

- It has an effective method for estimating missing data and maintains accuracy even when a large proportion of the data is missing.

Disadvantages of Random Forest

- Random forests can be biased toward particular features and are occasionally slow.

- The approach is not a good fit for problems that call for a linear model.

- Random forests can perform worse on high-dimensional data.

Difference Between Decision Tree vs Random Forest

Although a random forest is a set of decision trees, their behavior varies greatly. Let’s have a look at the major differences between a decision tree and a random forest:

| Decision Tree | Random Forest |
| --- | --- |
| | b) It operates at a slow rate. |
| | c) A random forest chooses observations at random, builds decision trees, and uses the average result. It is not based on any formulas. |

Logistic Regression and Random Forest

Logistic regression is widely used for industry-scale problems because it is simple to implement and outputs probabilities associated with each outcome rather than only a discrete label. The logistic regression approach is robust to minor random noise and is largely unaffected by mild multicollinearity.
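The point about probabilities can be illustrated with a tiny sketch. Logistic regression passes a weighted sum of the features through the sigmoid function, which yields a value between 0 and 1. The weights and bias below are hypothetical, hand-picked numbers, not coefficients learned from any data.

```python
import math


def predict_probability(features, weights, bias):
    """Logistic regression prediction: a probability between 0 and 1."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1 / (1 + math.exp(-z))


# Hypothetical, hand-picked coefficients (assumed for illustration only).
weights = [0.8, -0.5]
bias = 0.1

probability = predict_probability([2.0, 1.0], weights, bias)
label = "positive" if probability >= 0.5 else "negative"
print(round(probability, 3), label)
```

A discrete label only appears once a threshold (0.5 here) is applied to the probability, and that threshold can be moved to suit the problem.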

Let’s now compare random forest with logistic regression. Random Forest Classifier (RFC) is an accuracy-focused method that works best when properly tuned; otherwise, it can overfit quickly. Because each tree in an RFC is built with random selection, the trees can capture more complicated feature patterns and deliver high accuracy.

Random Forest Classifier tends to perform better with categorical data than with numeric data, whereas logistic regression can be awkward to use with categorical data. If the dataset contains more categorical data and outliers, the Random Forest Classifier is usually the better choice.

Bagged Decision Tree vs Random Forest

Bagging is an ensemble technique that fits several models on different samples of the training data and then combines the predictions of all models.

Random forest is an extension of bagging that also randomly selects the subset of features considered at each split. Bagging and random forests have both been shown to be successful on a wide range of predictive modeling problems.

Although they are effective, they are not well suited to classification tasks with an imbalanced class distribution. Nonetheless, numerous modifications have been proposed that adjust their behavior and make them more suitable for a severe class imbalance.

Classification trees that employ bagging and bootstrap sampling have been found to be more accurate than a single classifier. Bagging is one of the simplest and oldest ensemble-based algorithms, and it can be applied to tree-based algorithms to improve prediction accuracy. The random forest method is an improved variant of bagging: it is effectively a collection of decision trees built with a bagging process.

Random Forest Regression

Random Forest Regression is a supervised algorithm that performs regression using the ensemble learning method. The ensemble learning approach combines predictions from several models to get a more accurate forecast than a single model.

Random Forest Regression is a powerful and accurate approach. It often performs well on a wide range of problems, including those with non-linear relationships.

The disadvantages are as follows:

- The model is hard to interpret.

- Overfitting is possible.

- We must choose the number of trees to include in the model.

Linear regression

Linear regression is one of the best-known and best-understood algorithms in statistics and machine learning. When you begin to look into linear regression, things can quickly get confusing, because linear regression has been around for so long (more than 200 years). It has been studied from every available angle, and each perspective often has its own name. Linear regression is a linear model: it assumes a linear relationship between the input variables (x) and a single output variable (y). That is, y can be calculated from a linear combination of the input variables (x).

When only one input variable (x) is present, the procedure is known as simple linear regression. When there are several input variables, the procedure is typically referred to as multiple linear regression in the statistical literature.
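For the single-input case, the best-fitting line can be computed directly with the ordinary least squares formulas: the slope is the covariance of x and y divided by the variance of x, and the intercept follows from the means. The sketch below uses made-up data that lies exactly on y = 2x + 1; the function name is illustrative.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for one input variable: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data generated from y = 2x + 1 with no noise.
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]

slope, intercept = fit_simple_linear_regression(xs, ys)
print(slope, intercept)  # 2.0 1.0
```

With several input variables the same idea applies, but the solution is usually computed with matrix operations rather than these scalar formulas.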

Isn’t Linear Regression a statistical concept?

Machine learning, and predictive modeling in particular, is primarily concerned with minimizing a model’s error or making the most accurate predictions possible, at the expense of explainability. In applied machine learning, we borrow, reuse, and steal methods from a variety of fields, including statistics, and apply them to these ends.

Linear regression was developed in statistics and is studied as a model for understanding the relationship between input and output numerical variables, but it has been adopted by machine learning. It is both a statistical algorithm and a machine learning algorithm.

XGBoost versus Random Forest

Before we go into the reasons for or against either algorithm, let us briefly examine the core idea of each.

The term gradient boosting is made up of two parts: gradient and boosting. Gradient boosting recasts boosting as a numerical optimization problem in which the goal is to minimize the model’s loss function by adding weak learners using gradient descent. Gradient descent is a first-order iterative optimization algorithm for finding a local minimum of a differentiable function. Because gradient boosting is based on minimizing a loss function, different loss functions can be used, resulting in a flexible approach that applies to regression, multi-class classification, and other tasks.

Gradient boosting does not modify the sample distribution, since the weak learners train on the remaining residual errors of the strong learner (i.e., pseudo-residuals). By training on the model’s residuals, it gives more weight to misclassified observations. Intuitively, new weak learners are added to focus on the areas where the existing learners perform poorly. The contribution of each weak learner to the final prediction is determined by a gradient optimization process that reduces the strong learner’s overall error.
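The residual-fitting idea can be sketched in plain Python. This is a simplified illustration and not how XGBoost is implemented: it assumes a squared-error loss (for which the pseudo-residuals are just the ordinary residuals), a single numeric feature, one-split regression stumps as weak learners, and invented data and function names.

```python
def fit_stump(xs, residuals):
    """Regression stump: one threshold, predicts the mean residual on each side."""
    best = None
    # Skip the largest x so the right-hand side is never empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, rounds=50, learning_rate=0.1):
    """Start from the mean, then repeatedly fit a stump to the current residuals."""
    base = sum(ys) / len(ys)
    stumps = []
    predictions = [base] * len(xs)
    for _ in range(rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * stump(x) for x, p in zip(xs, predictions)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)


def mean_squared_error(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical non-linear data: y = x squared.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1, 4, 9, 16, 25, 36, 49, 64]

few = gradient_boost(xs, ys, rounds=5)
many = gradient_boost(xs, ys, rounds=200)
print(mean_squared_error(few, xs, ys), mean_squared_error(many, xs, ys))
```

Each round adds a small correction where the current model is most wrong, so the training error shrinks as rounds are added. Unlike the trees in a random forest, these learners are built one after another, not independently.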

Random forest is a bagging approach that builds a number of decision trees on various subsets of the given dataset and averages their results to improve predictive accuracy. So, rather than depending on a single decision tree, the random forest takes the prediction from each tree and predicts the final output based on the majority vote. In general, the larger the number of trees in the forest, the higher the accuracy and the lower the risk of overfitting.

A random forest is built on two random elements:

- A random subset of features.

- Bootstrap samples of the data.

| XGBoost | Random Forest |
| --- | --- |
| | b) We cannot guarantee that random forest will handle class imbalance accurately. |
| | c) Random forests adapt well to distributed processing. |

Conclusion

Decision trees are fairly basic. A decision tree is a collection of choices, while a random forest is a collection of decision trees. As a result, a random forest is a longer and slower procedure.

A decision tree, on the other hand, is quick and works well with huge datasets, particularly linear ones. The random forest algorithm requires extensive training, and a project may need more than one model, so the more trees and forests there are, the longer it takes. The right choice depends on your needs. If you only have limited time to work on a model, a decision tree is the practical choice.

Random forests, in turn, provide stability and dependable predictions. They are a powerful modeling tool that is far more robust than a single decision tree. They combine several decision trees to reduce overfitting and the errors of any individual tree, and hence produce useful results.

In practice, machine learning engineers and data scientists frequently use random forests because they are very accurate and because modern computers and systems can easily handle massive datasets that were previously out of reach.


Frequently Asked Questions

1. What are the advantages of random forest?

The advantages of random forest are:

- It can perform both classification and regression tasks.

- A random forest generates accurate predictions that are simple to understand.

- It can handle huge datasets effectively.

- The random forest method is more accurate than a conventional single decision tree at predicting outcomes.

2. Is random forest better than logistic regression?

Generally, logistic regression performs better when the number of noise variables is less than or equal to the number of explanatory variables, while random forest has higher true and false positive rates as the number of explanatory variables in a dataset increases.

3. What are the disadvantages of decision trees?

Decision trees have the disadvantage of being unstable, which means that a slight change in the data can result in a big restructuring of the optimal decision tree. They are also often relatively inaccurate: with comparable data, several other predictors perform better.

Decision trees help you weigh your alternatives. They are excellent tools for choosing between multiple options, and they give a highly effective structure for laying out alternatives and investigating the potential consequences of those options.

5. How do you avoid overfitting in decision trees?

There are two methods for preventing overfitting: pre-pruning (building a tree with fewer branches than would otherwise be the case) and post-pruning (building a tree in full and then removing parts of it). Pre-pruning is typically done with either a size cutoff or a maximum-depth cutoff.

6. What is the biggest weakness of decision trees compared to logistic regression classifiers?

Decision trees are much more likely to overfit the data because they can split on many distinct combinations of features, whereas logistic regression associates just one parameter with each feature.

7. Is decision tree good for regression?

The decision tree is one of the most widely used and effective approaches to supervised learning. It can be used for both regression and classification problems, with the latter being more common in practice. It is a tree-structured classifier with three kinds of nodes.

8. Can random forests be used for regression?

Yes. Random forests can also be used to solve regression problems. The non-linear nature of a random forest can give it an advantage over linear algorithms, making it an excellent choice.

9. Which type of Modeling are decision trees?

Decision tree learning, also known as induction of decision trees, is one of the predictive modeling approaches used in statistics, data mining, and machine learning.

10. Why are decision tree classifiers so popular?

Decision tree classifiers are popular because building a decision tree does not require any domain expertise or parameter setting, which makes it suitable for exploratory knowledge discovery. Decision trees can also handle multidimensional data.