Introduction to Decision Tree in Artificial Intelligence

Decision trees in artificial intelligence are the foundation for numerous classical machine learning algorithms such as Random Forests, Bagging, and Boosted Decision Trees. They were popularized by Leo Breiman, a statistician at the University of California, Berkeley. The idea is to represent data as a tree where each internal node denotes a test on an attribute (generally a condition), each branch represents an outcome of the test, and each leaf node (terminal node) holds a class label.

Decision trees are now extensively used in numerous applications for predictive modeling, including both classification and regression. Decision trees are sometimes also referred to as CART, which is short for Classification and Regression Trees. Let's discuss in depth how decision trees work, how they are built from scratch, and how we can apply them in Python.

What is a Decision Tree?

A decision tree is a supervised learning technique that can be used for both classification and regression problems, but it is mostly preferred for solving classification problems. It is a tree-structured classifier, where internal nodes represent the features of a data set, branches represent the decision rules, and every leaf node represents the outcome.

A decision tree is a flowchart-like structure in which each internal node represents a “test” on an attribute (e.g. whether a coin flip comes up heads or tails), each branch represents the outcome of the test, and every leaf node represents a class label (the decision taken after computing all attributes). The paths from the root to the leaves represent the classification rules.

Decision trees are analytical, algorithmic machine learning models that learn how to respond to various problems and their possible consequences. As a result, decision trees learn the rules of decision-making in specific contexts based on the available data. Because these rules are learned from labeled examples, this kind of learning is called supervised learning, and retraining the model as new labeled data arrives can improve its results over time. Thus, decision tree models are tools for supervised learning.

At each node of a tree, a test is applied which sends the query sample down one of the branches of the node. This continues until the query sample arrives at a terminal or leaf node. Each leaf node is associated with a value: a class label in classification, or a numeric value in regression. The value of the leaf node reached by the query sample is returned as the output of the tree.
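The traversal described above can be sketched in a few lines of Python. This is a minimal illustration, not a library API: the `Node` class, the `predict` function, and the `barks` feature are hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Union


@dataclass
class Node:
    """One node of a toy decision tree (hypothetical structure for illustration)."""
    # Internal nodes carry a test on the sample that returns True or False.
    test: Optional[Callable[[dict], bool]] = None
    if_true: Optional["Node"] = None
    if_false: Optional["Node"] = None
    # Leaf nodes carry the value returned as the output of the tree.
    value: Union[str, float, None] = None

    def is_leaf(self) -> bool:
        return self.test is None


def predict(node: Node, sample: dict):
    """Send the sample down one branch per node until a leaf is reached."""
    while not node.is_leaf():
        node = node.if_true if node.test(sample) else node.if_false
    return node.value


# A hand-built tree with a single test at the root (assumed feature name).
tree = Node(
    test=lambda s: s["barks"],
    if_true=Node(value="dog"),
    if_false=Node(value="cat"),
)

print(predict(tree, {"barks": True}))
print(predict(tree, {"barks": False}))
```

A regression tree would use the same traversal; only the leaf `value` would be a number instead of a class label.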

Types of Decision Tree

These models are used to solve problems depending on the type of data that needs to be predicted. There are two categories:

- Prediction of continuous variables

- Prediction of categorical variables

Prediction of Continuous Variables: The prediction depends on one or more predictors. For instance, the prices of houses in a neighborhood may depend on many variables such as the address, the availability of amenities like a swimming pool, the number of rooms, etc.

In this case, the decision tree will predict a house’s price based on the values of these variables. The predicted value will also be a continuous value.

The decision tree model used to predict such values is called a continuous variable decision tree. Continuous variable decision trees solve regression-type problems. In such cases, labeled datasets are used to predict a continuous, numeric output.

Prediction of Categorical Variables: The prediction of categorical variables is also based on other categorical or continuous variables. However, instead of predicting a value, this problem is about classifying a new data point into one of the available classes. For example, analyzing a discussion on Facebook to classify text as negative or supportive.

Diagnosing an illness based on a patient’s symptoms is also an example of a categorical variable decision tree model. Categorical variable decision trees solve classification-type problems where the output is a class rather than a value.
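The difference between the two kinds of tree shows up most clearly in what a leaf returns. The sketch below assumes the common convention that a regression leaf predicts the mean of the training targets that reach it and a classification leaf predicts their most frequent class; the function names and the sample data are made up for illustration.

```python
from collections import Counter
from statistics import mean


def regression_leaf(targets):
    """Continuous variable tree: predict the mean of the targets in the leaf."""
    return mean(targets)


def classification_leaf(labels):
    """Categorical variable tree: predict the most common class in the leaf."""
    return Counter(labels).most_common(1)[0][0]


# Invented training targets that ended up in one leaf of each kind of tree.
house_prices = [200_000, 220_000, 240_000]
sentiments = ["negative", "supportive", "supportive"]

print(regression_leaf(house_prices))
print(classification_leaf(sentiments))
```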

The decision tree has three types of nodes:

- Decision nodes – generally represented by squares

- Chance nodes – generally represented by circles

- End nodes – generally represented by triangles

Before learning more about decision trees, let’s get familiar with some of the terminology.

- Root Node: The node at the start of a decision tree. From this node the population starts dividing according to various features.

- Decision Nodes: The nodes we get after splitting the root node are called decision nodes.

- Leaf Nodes: The nodes where further splitting isn’t possible are called leaf nodes or terminal nodes.

- Sub-Tree: Just as a small portion of a graph is called a sub-graph, a subsection of a decision tree is called a sub-tree.

- Pruning: Removing some nodes to prevent overfitting.

How to Create a Decision Tree

In this section, we discuss the core algorithms describing how decision trees are created. The choice of algorithm depends on the target variable, so the algorithms used for classification trees differ from those used for regression trees.

There are several methods used to decide how to split the given data. The main goal of decision trees is to make the best splits between nodes, which will optimally divide the data into the correct categories. To do this, we need to use the right decision rules. The rules are what directly affect the performance of the algorithm.

There are some assumptions that need to be considered before we get started:

In the beginning, the whole data set is considered as the root; later, we use the algorithms to make a split or divide the root into sub-trees.

The feature values are considered to be categorical. If the values are continuous, they are discretized before building the model.

Records are distributed recursively on the basis of attribute values.

The ordering of attributes as the root or internal nodes of the tree is done using a statistical approach.

- Gini Impurity: If all elements are correctly divided into different classes (an ideal scenario), the division is considered to be pure. The Gini impurity (pronounced like “genie”) is used to gauge the likelihood that a randomly chosen sample would be incorrectly classified by a certain node. It’s known as an “impurity” measure since it gives us an idea of how the model differs from a pure division.

- Chi-Square: The chi-square method works well if the target variables are categorical, like success/failure or high/low. The core idea of the algorithm is to find the statistical significance of the differences that exist between the sub-nodes and the parent node.

The mathematical equation used to calculate chi-square is

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

where $O$ is the observed and $E$ the expected frequency of the target variable. It represents the sum of squares of standardized differences between the observed and the expected frequencies. Another main advantage of using chi-square is that it can perform multiple splits at a single node, which results in more accuracy and precision.
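As a rough sketch of the chi-square criterion, the function below sums (observed - expected)² / expected over every class in every sub-node. It assumes that the expected count for a class in a sub-node is the sub-node's size multiplied by that class's proportion in the parent node; the function name and the input layout are hypothetical.

```python
def chi_square_for_split(children):
    """Chi-square of a candidate split.

    children: list of dicts, one per sub-node, mapping class label to the
    observed count of that class in the sub-node (assumed input layout).
    """
    classes = sorted({label for child in children for label in child})
    parent_total = sum(sum(child.values()) for child in children)
    parent_counts = {
        label: sum(child.get(label, 0) for child in children) for label in classes
    }

    chi2 = 0.0
    for child in children:
        child_total = sum(child.values())
        for label in classes:
            # Expected count if the sub-node had the parent's class proportions.
            expected = child_total * parent_counts[label] / parent_total
            observed = child.get(label, 0)
            if expected > 0:
                chi2 += (observed - expected) ** 2 / expected
    return chi2


# A split whose sub-nodes mirror the parent carries no signal.
print(chi_square_for_split([{"success": 5, "failure": 5},
                            {"success": 5, "failure": 5}]))

# A split that separates the classes completely scores much higher.
print(chi_square_for_split([{"success": 10}, {"failure": 10}]))
```

A higher value means the sub-nodes differ more strongly from the parent, so a split with a higher chi-square would be preferred under this criterion.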

Learning Decision Trees from Observations

A trivial solution for building a decision tree is to construct a path to a leaf for each example. This merely memorizes the observations and does not extract any patterns from the data. It produces a consistent but overly complex hypothesis that is unlikely to generalize well to new, unseen observations. We should instead aim to construct the smallest tree that is consistent with the observations. One way to do this is to recursively test the most important attributes first.

Decision Tree Learning (DTL) Algorithm

If there are no attributes left, but both positive and negative examples remain, it means that these examples have exactly the same feature values but different classifications. This may be because some of the data is incorrect, or because the attributes do not give enough information to describe the situation fully (i.e. we lack other useful attributes).

The problem may also be truly non-deterministic, i.e. given two examples describing exactly the same conditions, we may make different decisions.
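The recursion can be sketched as follows. This is an illustrative outline under stated assumptions rather than a reference implementation: the `importance` score is a simple stand-in (how many examples a majority vote per branch would classify correctly), and when no attributes are left for a mixed set of examples the sketch falls back to the majority label. All names and the toy data are hypothetical.

```python
from collections import Counter


def majority_label(examples):
    """Most common label among (features, label) pairs."""
    return Counter(label for _, label in examples).most_common(1)[0][0]


def importance(attribute, examples):
    """Stand-in score: examples classified correctly if each branch of
    `attribute` simply predicted its own majority label."""
    groups = {}
    for features, label in examples:
        groups.setdefault(features[attribute], []).append(label)
    return sum(Counter(labels).most_common(1)[0][1] for labels in groups.values())


def dtl(examples, attributes, default=None):
    """Return a label (leaf) or a tuple (attribute, {value: subtree})."""
    if not examples:
        return default
    labels = [label for _, label in examples]
    if len(set(labels)) == 1:
        return labels[0]
    if not attributes:
        # Same feature values but different labels: noisy data or missing
        # attributes, so fall back to the majority label.
        return majority_label(examples)

    best = max(attributes, key=lambda a: importance(a, examples))
    remaining = [a for a in attributes if a != best]
    fallback = majority_label(examples)
    branches = {}
    for value in {features[best] for features, _ in examples}:
        subset = [(f, l) for f, l in examples if f[best] == value]
        branches[value] = dtl(subset, remaining, fallback)
    return (best, branches)


examples = [
    ({"barks": True, "claws": True}, "dog"),
    ({"barks": False, "claws": True}, "cat"),
    ({"barks": True, "claws": False}, "dog"),
]
print(dtl(examples, ["barks", "claws"]))
```

Because the most informative attribute is tested first, the resulting tree for this toy data needs only one test, on `barks`.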

Algorithms for Decision Tree

Decision trees operate on an algorithmic approach that splits the data set into individual data points based on different criteria. These splits are made on different variables, or features, of the data set. For example, if the aim is to determine whether a dog or a cat is being described by the input features, the variables the data is split on might be things like “claws” and “barks”.

So what algorithms are used to actually split the data into branches and leaves?

- Recursive Binary Split: There are various methods that can be used to split a tree, but the most common method of splitting is probably a technique referred to as “recursive binary split”.

When carrying out this method of splitting, the process starts at the root, and the number of features in the data set determines the number of possible splits. A function is used to determine how much accuracy every possible split will cost, and the split is made using the criterion that sacrifices the least accuracy. This procedure is carried out recursively, and sub-groups are formed using the same general strategy.

In order to determine the cost of a split, a cost function is used. A different cost function is used for regression tasks and classification tasks. The goal of both cost functions is to determine which branches have the most similar response values, or the most homogeneous branches. This makes intuitive sense if you consider that you want test data of a particular class to follow certain paths. For classification, the cost function is as follows.

$$G = \sum_{k} p_k (1 - p_k)$$

Here $p_k$ is the proportion of instances of class $k$ in a group. This is the Gini score, and it’s a measure of the effectiveness of a split, based on how many instances of different classes are in the groups resulting from the split. In other words, it quantifies how mixed the groups are after the split. A perfect split is one where each group resulting from the split consists only of inputs from one class. If a perfect split has been created, every $p_k$ value will be either 0 or 1 and $G$ will be equal to zero. You may be able to guess that the worst-case split is one where there’s a 50-50 representation of the classes in the split, in the case of binary classification.
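A small worked example may make the Gini score concrete. The sketch below computes the sum over classes of p_k × (1 - p_k) for a group of labels and, as an assumption that is common in CART-style implementations, scores a split by weighting each group's value by its share of the samples. The function names are illustrative.

```python
from collections import Counter


def gini(labels):
    """Gini score of one group: sum over classes of p_k * (1 - p_k)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return sum((count / n) * (1 - count / n) for count in Counter(labels).values())


def split_gini(groups):
    """Size-weighted Gini score of the groups produced by a split."""
    total = sum(len(group) for group in groups)
    return sum(len(group) / total * gini(group) for group in groups if group)


pure_group = ["cat", "cat", "cat", "cat"]
mixed_group = ["cat", "cat", "dog", "dog"]

print(gini(pure_group))   # only one class, so every p_k is 0 or 1
print(gini(mixed_group))  # 50-50 mix, the worst case for two classes

# A perfect split of the mixed group into two pure groups.
print(split_gini([["cat", "cat"], ["dog", "dog"]]))
```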

The splitting procedure terminates when all the data points have been turned into leaves and classified. However, you may want to stop the growth of the tree early. Large, complex trees are prone to overfitting, but several different methods can be used to combat this. One method of reducing overfitting is to specify a minimum number of data points that will be used to create a leaf. Another method of controlling overfitting is restricting the tree to a certain maximum depth, which controls how long a path can stretch from the root to a leaf.
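To show how recursive binary splitting and the two stopping rules fit together, here is a compact sketch for numeric features. It is one possible arrangement under stated assumptions: splits are of the form `feature < threshold`, candidate thresholds are the observed feature values, the cost is the size-weighted Gini score, and `max_depth` and `min_leaf` are hypothetical parameter names.

```python
from collections import Counter


def gini(labels):
    n = len(labels)
    if n == 0:
        return 0.0
    return sum((c / n) * (1 - c / n) for c in Counter(labels).values())


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def best_split(rows, min_leaf):
    """Try every feature/threshold pair and keep the cheapest valid split."""
    best = None
    for i in range(len(rows[0][0])):
        for threshold in sorted({x[i] for x, _ in rows}):
            left = [r for r in rows if r[0][i] < threshold]
            right = [r for r in rows if r[0][i] >= threshold]
            # Reject splits that would create a leaf with too few points.
            if len(left) < max(1, min_leaf) or len(right) < max(1, min_leaf):
                continue
            cost = sum(len(g) / len(rows) * gini([y for _, y in g])
                       for g in (left, right))
            if best is None or cost < best[0]:
                best = (cost, i, threshold, left, right)
    return best


def build(rows, max_depth, min_leaf, depth=0):
    """rows: list of (feature_tuple, label). Returns a label or a dict node."""
    labels = [y for _, y in rows]
    if depth >= max_depth or len(set(labels)) == 1:
        return majority(labels)
    split = best_split(rows, min_leaf)
    if split is None:
        return majority(labels)
    _, i, threshold, left, right = split
    return {"feature": i, "threshold": threshold,
            "left": build(left, max_depth, min_leaf, depth + 1),
            "right": build(right, max_depth, min_leaf, depth + 1)}


def depth_of(tree):
    if not isinstance(tree, dict):
        return 0
    return 1 + max(depth_of(tree["left"]), depth_of(tree["right"]))


rows = [((1.0,), "a"), ((2.0,), "a"), ((3.0,), "b"), ((4.0,), "b"), ((5.0,), "a")]
print(depth_of(build(rows, max_depth=3, min_leaf=1)))
print(depth_of(build(rows, max_depth=1, min_leaf=1)))
print(depth_of(build(rows, max_depth=3, min_leaf=2)))
```

Lowering `max_depth` or raising `min_leaf` both yield a shallower tree on this toy data, at the price of misclassifying the last training point.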

- Pruning: Another procedure involved in the creation of decision trees is pruning. Pruning can help increase the performance of a decision tree by stripping out branches containing features that have little predictive power or little importance for the model. In this way, the complexity of the tree is reduced, it becomes less likely to overfit, and the predictive utility of the model is increased.

When conducting pruning, the process can start at either the top of the tree or the bottom of the tree. However, the easiest method of pruning is to start with the leaves and attempt to replace each node with the most common class within it. If the accuracy of the model doesn’t deteriorate when this is done, then the change is kept. There are other techniques used to carry out pruning, but the method described above (reduced error pruning) is probably the most common method of decision tree pruning.
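Reduced error pruning can be sketched as a bottom-up pass over the tree. The version below makes several assumptions that are not stated in the text: accuracy is measured on a separate validation set, every internal node stores the majority class of its training data under a hypothetical `majority` key, and a node is collapsed whenever the single leaf does at least as well as the subtree on the validation rows that reach it.

```python
def predict(tree, x):
    """Follow `feature < threshold` tests until a leaf label is reached."""
    while isinstance(tree, dict):
        branch = "left" if x[tree["feature"]] < tree["threshold"] else "right"
        tree = tree[branch]
    return tree


def prune(tree, validation):
    """Return a pruned copy of `tree`, working from the leaves upwards."""
    if not isinstance(tree, dict):
        return tree
    i, t = tree["feature"], tree["threshold"]
    left_rows = [(x, y) for x, y in validation if x[i] < t]
    right_rows = [(x, y) for x, y in validation if x[i] >= t]
    pruned = dict(tree,
                  left=prune(tree["left"], left_rows),
                  right=prune(tree["right"], right_rows))

    subtree_correct = sum(predict(pruned, x) == y for x, y in validation)
    leaf_correct = sum(tree["majority"] == y for _, y in validation)
    # Keep the change only if accuracy does not deteriorate.
    return tree["majority"] if leaf_correct >= subtree_correct else pruned


# Hand-built tree whose lower split fits a quirk of the training data.
tree = {
    "feature": 0, "threshold": 3.0, "majority": "a",
    "left": "a",
    "right": {"feature": 0, "threshold": 5.0, "majority": "b",
              "left": "b", "right": "a"},
}
validation = [((1.0,), "a"), ((4.0,), "b"), ((5.5,), "b")]

print(prune(tree, validation))
```

On this validation set the lower split is replaced by a single leaf, while the root split is kept because removing it would reduce accuracy.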

As the name suggests, the decision tree algorithm takes the form of a tree-like structure. Yet, it’s inverted. A decision tree starts from the root, or the top decision node, which classifies data sets based on the values of carefully chosen attributes.

The root node represents the entire data set. This is where the first step in the algorithm selects the best predictor variable and makes it a decision node. It also divides the whole data set into various classes or smaller data sets.

The set of criteria for selecting attributes is called Attribute Selection Measures (ASM). ASM is based on selection measures, including information gain, entropy, Gini index, gain ratio, and so on. These attributes, also called features, create decision rules that help in branching. The branching process splits the root node into sub-nodes, splitting further into more sub-nodes until leaf nodes are formed. Leaf nodes cannot be divided further.

Some Advantages of Decision Trees are:

- Simple to understand and to interpret. Trees can be visualized.

- Requires little data preparation. Other techniques often require data normalization, the creation of dummy variables, and the removal of blank values. Note, however, that not every implementation supports missing values.

- The cost of using the tree (i.e., making a prediction) is logarithmic in the number of data points used to train the decision tree.

- Able to handle both numerical and categorical data. Other techniques are usually specialized in analyzing datasets that have only one type of variable.

- Able to handle multi-output problems.

- Uses a white-box model. If a given situation is observable in a model, the explanation for the condition is easily expressed in boolean logic. By contrast, in a black-box model (e.g., in an artificial neural network), results may be more difficult to interpret.

- Possible to validate a model using statistical tests. That makes it possible to account for the reliability of the model.

- Performs well even if its assumptions are somewhat violated by the true model from which the data were generated.

The Disadvantages of Decision Trees are:

- Decision-tree learners can create over-complex trees that don’t generalize the data well. This is called overfitting. Mechanisms such as pruning, setting the minimum number of samples required at a leaf node, or setting the maximum depth of the tree are necessary to avoid this problem.

- Decision trees can be unstable because small variations in the data might result in a completely different tree being generated. This problem is mitigated by using decision trees within an ensemble.

- Predictions of decision trees are neither smooth nor continuous, but piecewise constant approximations. Therefore, they aren’t good at extrapolation.

- The problem of learning an optimal decision tree is known to be NP-complete under several aspects of optimality and even for simple concepts. Consequently, practical decision-tree learning algorithms are based on heuristic algorithms such as the greedy algorithm, where locally optimal decisions are made at each node. Such algorithms cannot guarantee to return the globally optimal decision tree. This can be mitigated by training multiple trees in an ensemble learner, where the features and samples are randomly sampled with replacement.

- There are concepts that are hard to learn because decision trees don’t express them easily, such as XOR, parity, or multiplexer problems.

- Decision tree learners create biased trees if some classes dominate. It’s therefore recommended to balance the dataset prior to fitting with the decision tree.
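The XOR difficulty in the list above can be checked with a short calculation. The sketch below uses the Gini score (sum of p_k × (1 - p_k), weighted by group size, which is an assumption of this example) and shows that splitting the four XOR points on either input leaves the impurity unchanged, so a greedy learner sees no reason to prefer any first split.

```python
from collections import Counter


def gini(labels):
    n = len(labels)
    return sum((c / n) * (1 - c / n) for c in Counter(labels).values())


def gini_after_split(rows, feature):
    """Size-weighted Gini score after splitting on one feature's values."""
    groups = {}
    for x, y in rows:
        groups.setdefault(x[feature], []).append(y)
    return sum(len(g) / len(rows) * gini(g) for g in groups.values())


# The four XOR points: the label is 1 exactly when the inputs differ.
xor_rows = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

before = gini([y for _, y in xor_rows])
print(before)
print(gini_after_split(xor_rows, 0))
print(gini_after_split(xor_rows, 1))
```

Only a second level of splits separates the classes, which is why such concepts need deeper trees than their simple definition suggests.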

Decision Tree in Machine Learning Python:

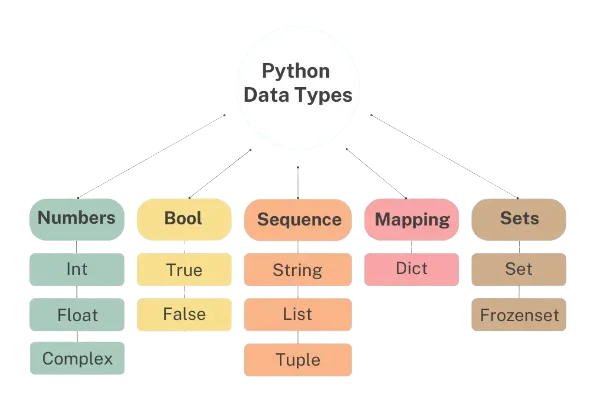

Decision trees belong to the information-based learning algorithms, which use different measures of information gain for learning. We can use decision trees for problems where we have continuous as well as categorical input and target features.

The main idea of decision trees is to find those descriptive features which contain the most “information” regarding the target feature and then split the data set along the values of these features so that the target feature values for the resulting sub-datasets are as pure as possible. The descriptive feature which leaves the target feature most pure is said to be the most informative one. This process of finding the “most informative” feature is repeated until we meet a stopping criterion, at which point we end up in so-called leaf nodes.

The leaf nodes contain the predictions we will make for new query instances presented to our trained model. This is possible since the model has learned the underlying structure of the training data and hence can, given some assumptions, make predictions about the target feature value (class) of unseen query instances.

A decision tree mainly contains a root node, interior nodes, and leaf nodes, which are connected by branches.

In the preceding section, we introduced information gain as a measure of how well a descriptive feature is suited to split a data set. To be able to calculate the information gain, we first have to introduce the term entropy of a data set. The entropy of a data set is used to measure the impurity of a data set, and we will use this kind of informativeness measure in our calculations.

There are also other types of measures that can be used to calculate the information gain. The most prominent ones are the Gini index, chi-square, information gain ratio, and variance. The term entropy (in information theory) goes back to Claude E. Shannon.

The idea behind entropy is, in simplified terms, the following: imagine you have a lottery wheel that contains 100 green balls. The set of balls within the lottery wheel can be said to be completely pure because only green balls are included. To express this in the language of entropy, this set of balls has an entropy of 0 (we can also say zero impurity).
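The lottery wheel example can be reproduced with a few lines of Python. The sketch uses Shannon entropy with base-2 logarithms and defines information gain as the parent's entropy minus the size-weighted entropy of the groups after a split. The second wheel with 50 red balls is an invented contrast case, and the function names are illustrative.

```python
from collections import Counter
from math import log2


def entropy(items):
    """Shannon entropy in bits: sum over classes of p * log2(1 / p)."""
    n = len(items)
    return sum((c / n) * log2(n / c) for c in Counter(items).values())


def information_gain(parent, groups):
    """Entropy of the parent minus the size-weighted entropy of the groups."""
    weighted = sum(len(g) / len(parent) * entropy(g) for g in groups if g)
    return entropy(parent) - weighted


pure_wheel = ["green"] * 100
mixed_wheel = ["green"] * 50 + ["red"] * 50  # invented contrast case

print(entropy(pure_wheel))   # completely pure set
print(entropy(mixed_wheel))  # maximally mixed set of two colours

# Sorting the mixed wheel into one group per colour removes all impurity.
print(information_gain(mixed_wheel, [["green"] * 50, ["red"] * 50]))
```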

Conclusion

In this article on decision trees in artificial intelligence, we have looked briefly at many aspects of the decision tree. From here, we can move on to learning more about the decision tree, how it works, and what it is used for.

In this blog, we discussed the decision tree algorithm in depth and finished with the advantages and disadvantages of using decision trees. There’s still a lot more to learn, and this article will give you a quick start for exploring other advanced classification algorithms. Decision trees are classic and natural learning models. They’re based on the fundamental concept of divide and conquer. In the field of artificial intelligence, decision trees are used to build learning machines by teaching them how to determine success and failure. These learning machines then analyze incoming data and store it.

Frequently Asked Questions

1. What is a decision tree and example?

A decision tree is a specific type of probability tree that enables you to make a decision about some kind of process. For example, you might want to choose between manufacturing item A or item B, or investing in choice 1, choice 2, or choice 3. It is a supervised learning technique that can be used for both classification and regression problems, but it is mostly preferred for solving classification problems.

2. What is the decision tree algorithm?

Decision trees use multiple algorithms to decide how to split a node into two or more sub-nodes. The creation of sub-nodes increases the homogeneity of the resulting sub-nodes. In other words, the purity of the node increases with respect to the target variable.

3. What is the decision tree used for?

Decision trees are a non-parametric supervised learning method used for classification and regression. The aim is to create a model that predicts the value of a target variable by learning simple decision rules inferred from the data features.

4. What is a decision tree in machine learning with an example?

The tree can be explained by two entities, namely decision nodes and leaves. The leaves are the decisions or the final outcomes, and the decision nodes are where the data is split. An example of a decision tree can be explained using the binary tree shown above.

5. What are the types of decision trees?

These models are used to solve problems depending on the type of data that needs to be predicted. There are two categories:

- Prediction of continuous variables

- Prediction of categorical variables

6. What is decision tree analysis?

Decision tree analysis involves visually outlining the potential outcomes, costs, and consequences of a complex decision. These trees are particularly helpful for analyzing quantitative data and making a decision based on numbers.

7. What is the advantage of a decision tree?

It has various advantages: it is able to handle multi-output problems, and it is able to handle both numerical and categorical data, whereas other techniques are usually specialized in analyzing datasets that have only one type of variable.

8. What are the components of a decision tree?

A decision tree has three types of nodes:

- Decision nodes – generally represented by squares

- Chance nodes – generally represented by circles

- End nodes – generally represented by triangles

9. How many decision trees are there?

Four commonly cited types of decision tree algorithms are ID3 (Iterative Dichotomiser 3), Classification and Regression Trees (CART), Chi-Square, and Reduction in Variance.

10. What are the disadvantages of decision trees?

Decision trees can be unstable because small variations in the data might result in a completely different tree being generated. This problem is mitigated by using decision trees within an ensemble.
