Introduction to ETL Interview Questions

Extract, Transform, and Load (ETL) is a three-phase computing process that extracts data, transforms it (cleaning, sanitizing, and scrubbing it), and finally loads it into an output data container. Data can be collected from multiple sources and output to a variety of destinations. ETL processing is usually done with software applications, but system operators can also do it manually. ETL software automates the entire procedure and can be run manually or on a recurring schedule, either as individual jobs or as a batch of jobs.

In the 1970s, the ETL process became a popular concept, and it is now widely used in data warehousing. ETL systems frequently integrate data from a variety of applications (systems), which are typically developed and supported by various vendors or hosted on different computer hardware. Other stakeholders frequently manage and operate the separate systems containing the original data. A cost accounting system, for example, might combine data from payroll, sales, and purchasing.

ETL Interview Questions for Freshers

What exactly is ETL? Please explain.

ETL stands for Extraction, Transformation, and Loading. It is a key concept in data warehouse systems, where the data integration process consists of these three basic steps. Extraction is the process of pulling data from various data sources such as transactional systems or applications. Transformation is the process of applying conversion rules to data to make it suitable for analytical reporting. Loading is the process of moving the data into the target system, i.e., the data warehouse. The interviewer can ask you these ETL Interview Questions to test your knowledge of ETL basics.
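The three steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the source rows, the `sales` table, and the function names are hypothetical, and an in-memory SQLite database stands in for a real data warehouse.

```python
import sqlite3

# Hypothetical source rows, standing in for a transactional system.
SOURCE_ROWS = [
    {"id": 1, "name": " Alice ", "amount": "10.50"},
    {"id": 2, "name": "bob", "amount": "7"},
]

def extract():
    """Extract: read rows from the (hypothetical) source."""
    return list(SOURCE_ROWS)

def transform(rows):
    """Transform: apply simple conversion rules (trim, title-case, cast)."""
    return [
        (row["id"], row["name"].strip().title(), float(row["amount"]))
        for row in rows
    ]

def load(conn, rows):
    """Load: write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales "
        "(id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

def run_etl():
    conn = sqlite3.connect(":memory:")  # in-memory stand-in for a warehouse
    load(conn, transform(extract()))
    return conn

conn = run_etl()
loaded = conn.execute("SELECT id, name, amount FROM sales ORDER BY id").fetchall()
print(loaded)
```

Keeping each phase in its own function mirrors how ETL tools separate the steps, so each one can be tested on its own.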

What is ETL Testing?

Nowadays, almost every business depends heavily on data, which is a good thing! We can comprehend more with objective and accurate data than we can with our human brains alone. What matters is the timing. Data processing, like any other system, is prone to mistakes. What is the value of data when some of it may be lost, incomplete, or irrelevant?

Here’s where ETL testing comes in. ETL is now regarded as an important part of data warehousing architecture in business processes: it extracts data from source systems, transforms it into a consistent data type, and loads it into a single repository.

ETL testing involves the validation, evaluation, and qualification of data after it has been extracted, transformed, and loaded. We conduct ETL testing to ensure that the final data was imported into the system in the correct format. It ensures that data reaches its destination safely and in good condition before it enters your BI (Business Intelligence) reports. The interviewer can ask you these ETL Interview Questions to test your knowledge of ETL testing.

What are the Benefits of ETL Testing?

Some of the noteworthy benefits that are emphasized when advocating ETL Testing are as follows:

- Ensures that data is transferred from one system to another in a timely and efficient manner.

- Identifies and prevents data quality problems during ETL operations, such as duplicate data or data loss.

- Ensures that the ETL process runs smoothly and without interruption.

- Ensures that all data is implemented according to client specifications and that the result is accurate.

- Ensures that bulk data is securely and completely transferred to the new location.

The interviewer can ask you these ETL Interview Questions to test your knowledge of the benefits of ETL Testing.

What exactly do ETL testing activities incorporate?

ETL testing includes:

- Checking that the data is transformed correctly according to the business requirements.

- Ensuring that the expected data is loaded into the data warehouse without truncation or loss of data.

- Verifying that the ETL program reports erroneous data and substitutes default values.

- Making sure data loads within the required time frame, to confirm scalability and performance.

The interviewer can ask you these ETL Interview Questions to test your knowledge of ETL testing basics.

What are the different types of ETL testing?

Before you start the testing process, you have to choose the appropriate ETL testing technique. It is vital to make sure that the ETL test is carried out using the best approach and that all stakeholders agree to it. Testing team members need to be familiar with this technique and the steps involved in testing. Below are several kinds of testing strategies that could be used:

| Testing type | Description |
| --- | --- |
| Production Validation Testing | Also referred to as “production reconciliation” or “table balancing,” it validates data in production systems and compares it against the source data. |
| Source to Target Count Testing | This makes sure that the number of records loaded into the target is consistent with what is expected. |
| Source to Target Data Testing | This makes sure that no data is lost or truncated when loading data into the warehouse, and that the data values are correct after transformation. |
| Metadata Testing | This checks whether the source and target systems have the same schema, constraints, indexes, lengths, data types, etc. |
| Performance Testing | This validates that data loads into the data warehouse within the expected time frames, to confirm scalability and speed. |
| Data Transformation Testing | This makes sure that data transformations are carried out according to the business rules and requirements. |
| Data Quality Testing | This checks numbers, precision, nulls, dates, etc. It includes both Syntax Tests, which report invalid characters, incorrect upper/lower case order, etc., and Reference Tests, which determine whether the data is properly formatted. |
| Data Integration Testing | Testers make sure the data from a variety of sources has been properly integrated into the target system, and they validate the threshold values. |
| Report Testing | This examines the data in a summary report, confirming the layout and functionality, and performing calculations for subsequent analysis. |

The interviewer can ask you these ETL Interview Questions to test your understanding of the different types of ETL testing.
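Source-to-target count testing and source-to-target data testing are often written as SQL checks. The sketch below is a minimal illustration, assuming two hypothetical tables `src` and `tgt` in an in-memory SQLite database; the helper names are made up for this example and the table names are trusted literals.

```python
import sqlite3

def count_rows(conn, table):
    # Table names cannot be bound as parameters; these names are trusted literals.
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]

def missing_in_target(conn, source, target, key="id"):
    """Return the source keys that never arrived in the target (data loss)."""
    query = f"SELECT {key} FROM {source} EXCEPT SELECT {key} FROM {target}"
    return sorted(row[0] for row in conn.execute(query))

def mismatched_rows(conn, source, target, key="id", column="name"):
    """Return rows whose value differs between source and target (e.g. truncation)."""
    query = (
        f"SELECT s.{key}, s.{column}, t.{column} "
        f"FROM {source} s JOIN {target} t ON s.{key} = t.{key} "
        f"WHERE s.{column} <> t.{column}"
    )
    return conn.execute(query).fetchall()

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src (id INTEGER, name TEXT);
    CREATE TABLE tgt (id INTEGER, name TEXT);
    INSERT INTO src VALUES (1, 'Alice'), (2, 'Bob'), (3, 'Carol');
    INSERT INTO tgt VALUES (1, 'Alice'), (2, 'Bo');
""")

print(count_rows(conn, "src"), count_rows(conn, "tgt"))  # count test
print(missing_in_target(conn, "src", "tgt"))             # lost rows
print(mismatched_rows(conn, "src", "tgt"))               # truncated values
```

In this made-up data set, one row is missing from the target and one name has been truncated, so both checks report a problem.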

ETL Interview Questions Intermediate Level

Precisely what are the roles and duties of an ETL tester?

Since ETL testing is so essential, ETL testers are in high demand. ETL testers verify data sources, extract data, apply transformation logic, and load data into the target tables. Here are the key responsibilities of an ETL tester:

- Has in-depth knowledge of ETL tools and procedures.

- Runs thorough tests of the ETL software.

- Examines the data warehouse test component.

- Executes backend data-driven tests.

- Designs and executes test cases, test harnesses, test plans, etc.

- Identifies problems and suggests the best remedies.

- Reviews and approves the requirements and design specifications.

- Writes SQL queries for testing scenarios.

- Carries out different kinds of tests, including checks on primary keys, defaults, and other ETL-related functionality.

- Conducts regular quality checks.

The interviewer can ask you these ETL Interview Questions to test your understanding of the roles of an ETL tester.

What are the various tools used in ETL?

- Cognos Decision Stream

- Oracle Warehouse Builder

- Business Objects XI

- SAS Business Warehouse

- SAS Enterprise ETL Server

The interviewer can ask you these ETL Interview Questions to test your understanding of the tools used in ETL.

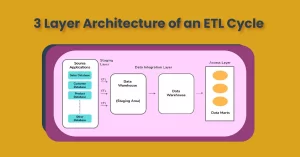

What is the 3 Layer Architecture of an ETL Cycle?

The three layers in an ETL cycle are:

| Layer | Description |
| --- | --- |
| Staging Layer | The staging layer stores the data extracted from the various source systems. |
| Data Integration Layer | The integration layer transforms the data from the staging layer and moves it to a database. In the database, the data is organized into hierarchical groups, commonly known as dimensions, and into facts and aggregate facts. The combination of fact and dimension tables in a data warehouse system is referred to as a schema. |
| Access Layer | The access layer is used by end users to retrieve the data for analytical reporting. |

The interviewer can ask you these ETL Interview Questions to test your understanding of the architecture of the ETL cycle.

How can you define cubes along with OLAP cubes?

The cube is one of the structures on which data processing depends heavily. In their simplest form, cubes are data processing units that consist of dimensions and fact tables from the data warehouse. They offer clients a multidimensional view of data, along with querying and analysis capabilities.

Online Analytical Processing (OLAP) is software that enables you to analyze data from a number of databases at the same time. For reporting purposes, an OLAP cube can be used to store data in multidimensional form. Cubes make viewing and creating reports much easier, and they streamline the reporting process. End users are responsible for managing and maintaining these cubes and have to update their data manually. The interviewer can ask you these SAS ETL Interview Questions to test your understanding of OLAP cubes.

When do we require the staging area in the ETL procedure?

The staging area is a central area that sits between the data sources and the data warehouse or data mart systems. It is a place where data is stored temporarily during data integration. In the staging area, data is cleansed and checked for duplicates. The staging area offers many benefits; its key purposes are to boost efficiency, ensure data integrity, and support data quality operations. The interviewer can ask you these ETL Interview Questions to test your understanding of the staging area in the ETL procedure.
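As a rough illustration of the staging idea, the sketch below lands raw rows in a staging table, removes duplicates and incomplete rows on the way into the warehouse table, and then empties the staging table. The table names `staging_customers` and `dw_customers` are hypothetical, and SQLite stands in for the real databases.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE staging_customers (customer_id INTEGER, email TEXT);
    CREATE TABLE dw_customers (customer_id INTEGER PRIMARY KEY, email TEXT);
""")

# Raw extract: contains one duplicate row and one row with a missing email.
raw_rows = [
    (1, "a@example.com"),
    (1, "a@example.com"),
    (2, None),
    (3, "c@example.com"),
]
conn.executemany("INSERT INTO staging_customers VALUES (?, ?)", raw_rows)

# Cleanse in staging: drop duplicates and incomplete rows before loading.
conn.execute("""
    INSERT INTO dw_customers (customer_id, email)
    SELECT DISTINCT customer_id, email
    FROM staging_customers
    WHERE email IS NOT NULL
""")

# Staging data is temporary, so clear it once the load has succeeded.
conn.execute("DELETE FROM staging_customers")
conn.commit()

warehouse_rows = conn.execute(
    "SELECT customer_id, email FROM dw_customers ORDER BY customer_id"
).fetchall()
staging_count = conn.execute("SELECT COUNT(*) FROM staging_customers").fetchone()[0]
print(warehouse_rows, staging_count)
```

Doing the cleanup in staging keeps bad rows away from the warehouse tables and avoids running heavy checks against the source systems.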

What is the difference between data warehousing and data mining?

Data warehousing is a broader concept than data mining. Data mining involves extracting hidden information from the data and interpreting it for future forecasting. In contrast, data warehousing includes operations such as analytical reporting to create ad hoc and detailed reports, and information processing to produce interactive dashboards and charts. The interviewer can ask you these SAS ETL Interview Questions to test your understanding of data warehousing and data mining.

What is BI?

Business Intelligence is the process of collecting raw business data and turning it into meaningful insight that is more useful for the business. The interviewer can ask you these SAS ETL Interview Questions to test your understanding of BI.

Precisely how can the mapping be fine-tuned in ETL?

Steps for fine-tuning a mapping include using the filter condition in the source qualifier rather than a separate filter transformation, using a persistent cache in the lookup transformation, using the aggregator transformation with sorted input and grouping by different ports, using operators in expressions instead of functions, and increasing the cache size and commit interval. The interviewer can ask you these ETL Interview Questions to test your understanding of mapping.

What are the differences between connected and unconnected lookups in ETL?

A connected lookup is part of the mapping flow and can return multiple values. It can be connected to another transformation and returns its values to it. An unconnected lookup is used when the lookup is not part of the main flow, and it returns only a single output. It cannot be connected to another transformation, but it is reusable. The interviewer can ask you these ETL Interview Questions to test your knowledge of lookups within ETL.

Explain what a fact is and what its types are.

A crucial component of data warehousing is the fact table. A fact table essentially represents the measurements, metrics, or facts of a business process. Facts are stored in fact tables, which are connected to a number of dimension tables via foreign keys. Facts are usually detailed or aggregated measurements of a business process that can be calculated and grouped to answer a business question. Data schemas such as the star schema or snowflake schema consist of a central fact table surrounded by a number of dimension tables. Measures such as sales, cost, loss, and profit are some examples of facts.

Fact tables have two kinds of columns: foreign keys and measure columns. Foreign key columns hold the keys to the dimensions, while measure columns contain numeric facts. Additional attributes can be added, depending on the company’s needs.

Types of Facts

Facts could be split into 3 fundamental types, as follows:

- Additive: Fully additive facts are the most flexible and useful. We can sum additive facts across any dimension related to the fact table.

- Semi-additive: We can sum semi-additive facts across some of the dimensions linked to the fact table, but not all.

- Non-additive: Non-additive facts cannot be summed across any dimension. A ratio is a good example of a non-additive fact.

The interviewer can ask you these ETL Interview Questions to test your knowledge of facts and their types.
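The three fact types can be illustrated with a small, made-up fact table. In this sketch the column names and figures are hypothetical: `sales` and `cost` are treated as additive, `stock` (an inventory level) as semi-additive, and the margin ratio as non-additive.

```python
# Hypothetical daily snapshots for two stores.
rows = [
    {"day": 1, "store": "A", "sales": 100.0, "cost": 60.0, "stock": 10},
    {"day": 1, "store": "B", "sales": 200.0, "cost": 150.0, "stock": 20},
    {"day": 2, "store": "A", "sales": 50.0, "cost": 30.0, "stock": 8},
    {"day": 2, "store": "B", "sales": 150.0, "cost": 120.0, "stock": 25},
]

# Additive: sales can be summed across every dimension (days and stores).
total_sales = sum(r["sales"] for r in rows)

# Semi-additive: stock can be summed across stores for a single day,
# but summing it across days would double-count, so take the latest day.
last_day = max(r["day"] for r in rows)
closing_stock = sum(r["stock"] for r in rows if r["day"] == last_day)

# Non-additive: a margin ratio must be recomputed from its additive parts.
total_cost = sum(r["cost"] for r in rows)
overall_margin = (total_sales - total_cost) / total_sales

# Summing the per-row ratios gives a meaningless number (greater than 1 here).
naive_margin_sum = sum((r["sales"] - r["cost"]) / r["sales"] for r in rows)

print(total_sales, closing_stock, overall_margin, naive_margin_sum)
```

The last line shows why ratios are usually stored as their numerator and denominator, so that they can be recomputed at whatever level a report needs.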

Just how are the tables analyzed in ETL?

Statistics generated by the ANALYZE statement are used by the cost-based optimizer to estimate the most efficient plan for data retrieval. The ANALYZE statement also supports validating the structure of objects, as well as space management, in the system. Its operations include DELETE, ESTIMATE, and COMPUTE. The interviewer can ask you these ETL Interview Questions to test your knowledge of tables. These questions are also among ETL interview questions for data analyst professionals.
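The general idea of gathering optimizer statistics can be tried out with SQLite, which also has an `ANALYZE` command. Note that this is a swapped-in illustration: SQLite's `ANALYZE` does not have the DELETE, ESTIMATE, and COMPUTE options described above, and the `orders` table and index name are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER);
    CREATE INDEX idx_orders_customer ON orders (customer_id);
    INSERT INTO orders (customer_id) VALUES (1), (1), (2), (3);
""")

# Gather optimizer statistics; SQLite stores them in the sqlite_stat1 table.
conn.execute("ANALYZE")

stats = conn.execute(
    "SELECT tbl, idx, stat FROM sqlite_stat1 WHERE tbl = 'orders'"
).fetchall()
for tbl, idx, stat in stats:
    print(tbl, idx, stat)
```

The query planner reads these statistics when it chooses between indexes, which is the same role the text describes for a cost-based optimizer.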

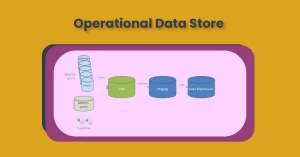

What is meant by ODS (operational data store)?

The ODS serves as a repository for data between the staging area and the data warehouse. After data is inserted into the ODS, the ODS loads all of it into the EDW (enterprise data warehouse). The advantages of an ODS typically relate to business operations, as it provides current, clean data from several sources in one place. Unlike other databases, an ODS database is read-only, and users can’t update it. The interviewer can ask you these ETL Interview Questions to test your knowledge of ODS. These questions are also among ETL interview questions for data analyst professionals.

Exactly how does the operational data store work?

Aggregated data is loaded into the enterprise data warehouse (EDW) after it has been populated in the operational data store (ODS). In general, an ODS is a semi-data warehouse (DWH) that allows analysts to evaluate business data. Data typically persists in the ODS for no more than 30 to 45 days. The interviewer can ask you these ETL Interview Questions to test your knowledge of how the operational data store works. These questions are also among ETL interview questions for data analyst professionals.

ETL Interview Questions Advanced Level

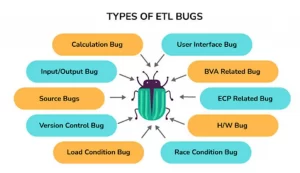

Point out several of the ETL bugs.

Below are a few typical ETL bugs:

| Bug type | Description |
| --- | --- |
| User Interface Bug | GUI bugs include problems with color selection, spelling, navigation, font style, etc. |
| Input/Output Bug | This kind of bug causes the application to accept invalid values in place of valid ones. |
| Boundary Value Analysis Bug | These bugs concern the checks on both the minimum and maximum values. |
| Calculation Bug | These bugs are generally mathematical mistakes that lead to incorrect results. |
| Load Condition Bug | A bug like this does not allow multiple users, or does not allow user-accepted data. |
| Race Condition Bug | This kind of bug interferes with the system’s ability to operate properly and causes it to crash or hang. |
| ECP (Equivalence Class Partitioning) Bug | A bug of this sort leads to invalid types. |
| Version Control Bug | These bugs usually show up during regression testing and do not provide version details. |
| Hardware Bug | This sort of bug prevents the device from responding to an application as expected. |
| Help Source Bug | This bug causes the help documentation to be incorrect. |

The interviewer can ask you these ETL Interview Questions to test your knowledge of ETL bugs. These questions are also among ETL interview questions for data analyst professionals.

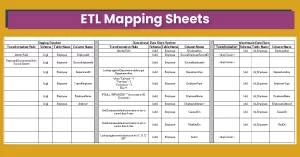

Explain ETL Mapping Sheets

ETL mapping sheets contain all of the essential information from the source file, including all of the rows and columns. These sheets help professionals write the SQL queries for testing ETL tools. The interviewer can ask you these ETL Interview Questions to test your knowledge of ETL Mapping Sheets.

Point out a couple of test cases and describe them.

- Mapping Doc Validation: Verifying whether the ETL information is provided in the mapping document.

- Data Check: Every aspect of the data, including the data check, number check, and null check, is tested in this case.

- Correctness Issues: Misspelled data, inaccurate data, and null data are tested.

The interviewer can ask you these ETL Interview Questions to test your knowledge of test cases. These questions are also among ETL interview questions for data engineer professionals.

Enumerate a handful of ETL bugs.

Calculation bugs, user interface bugs, source bugs, load condition bugs, and ECP-related bugs. The interviewer can ask you these ETL Interview Questions to test your knowledge of ETL bugs. These questions are also among ETL interview questions for data engineer professionals.

What Are Distinct Stages Of Data Mining?

Data mining is a logical process for searching vast amounts of data for essential information. It proceeds in the following stages:

| Stage | Description |
| --- | --- |
| Stage 1: Exploration and Data Preparation | The first step is to investigate and prepare the data. The exploration stage aims to identify the critical variables and define their nature. |
| Stage 2: Pattern Identification | The primary action in this stage is to look for patterns and choose the one that allows you to make the best prediction. |
| Stage 3: Deployment | This is the most crucial stage. It cannot be reached until a consistent, highly predictive pattern is discovered in stage 2. That pattern can then be applied to determine whether the desired outcome has been attained. |

The interviewer can ask you these ETL Interview Questions to test your knowledge of distinct stages of mining. These questions are also among ETL interview questions for data engineer professionals.

What Are Some of the Issues That Data Mining Can Help With?

Data mining can be applied to various sectors and industries, including product and service marketing, artificial intelligence, and government intelligence. The FBI of the United States uses data mining to screen security and intelligence data for illicit and incriminating electronic information spread across the internet.

The interviewer can ask you these ETL Interview Questions to test your knowledge of Data Mining. These questions are also among ETL interview questions for data engineer professionals.

What Is Data Purging and How Does It Work?

Data purging is the process to remove data from a data warehouse. Junk data, such as rows containing null values or spaces, is usually cleaned up. Eliminating these types of trash values is known as data cleansing. The interviewer can ask you these ETL Interview Questions to test your knowledge of data purging.
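A purge of junk rows is often just a `DELETE` with the right filter. The sketch below assumes a hypothetical `customers` table in SQLite and treats a row as junk when its `name` is null or contains only spaces.

```python
import sqlite3

def purge_junk_rows(conn):
    """Delete rows whose name is NULL or blank; return how many were removed."""
    cursor = conn.execute(
        "DELETE FROM customers WHERE name IS NULL OR TRIM(name) = ''"
    )
    conn.commit()
    return cursor.rowcount

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [(1, "Alice"), (2, None), (3, "   "), (4, "Dana")],
)

removed = purge_junk_rows(conn)
remaining = conn.execute("SELECT id, name FROM customers ORDER BY id").fetchall()
print(removed, remaining)
```

In practice the definition of junk comes from the business rules, so the `WHERE` clause is the part that changes from one project to another.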

What Is a Bus Schema and How Does It Work?

A bus schema identifies the dimensions that are common across business processes, that is, the conformed dimensions. It consists of conformed dimensions and a standardized definition of facts. The interviewer can ask you these ETL Interview Questions to test your knowledge of Bus Schema.

What Is The Distinction Between a Lookup and a Joiner?

A joiner connects two or more tables to obtain data from them (just like joins in SQL). A lookup is used to compare and check the source and target tables (it is similar to a correlated subquery in SQL). The interviewer can ask you these ETL Interview Questions to test your knowledge of the lookup and the joiner.
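The SQL analogy can be made concrete. This sketch only illustrates the comparison drawn above, not the behavior of any particular ETL tool; the `customers` and `orders` tables are hypothetical and SQLite is used for convenience.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER);
    INSERT INTO customers VALUES (1, 'Alice'), (2, 'Bob');
    INSERT INTO orders VALUES (10, 1), (11, 2), (12, 99);
""")

# Joiner-style: combine the two tables with an inner join.
joined = conn.execute("""
    SELECT o.order_id, c.name
    FROM orders o
    JOIN customers c ON c.customer_id = o.customer_id
    ORDER BY o.order_id
""").fetchall()

# Lookup-style: for each order, look up one value with a correlated subquery.
looked_up = conn.execute("""
    SELECT o.order_id,
           (SELECT c.name FROM customers c
            WHERE c.customer_id = o.customer_id) AS customer_name
    FROM orders o
    ORDER BY o.order_id
""").fetchall()

print(joined)
print(looked_up)
```

The inner join drops the order with no matching customer, while the lookup-style query keeps every order and returns a null for the missing match, which is useful when checking source rows against a target.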

In ETL, how do you define initial load and full load?

| Load type | Description |
| --- | --- |
| Initial Load | This is the first run, in which the historical data is loaded into the target. After it, loads become incremental (i.e., they bring in only modified and new records). |
| Full Load | The entire data dump takes place the first time a data source is loaded into the warehouse. |

The interviewer can ask you these ETL Interview Questions to test your knowledge of initial load and full load. These questions are also among ETL interview questions for 5 years experience professionals.
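The difference between loading everything and loading only modified and new records can be sketched as follows. The row layout, the `updated_at` field, and the function names are assumptions made for this example; real pipelines usually keep the last-extracted date in a control table.

```python
from datetime import date

def full_load(source_rows):
    """Full load: copy every source row into a fresh target."""
    return {row["id"]: dict(row) for row in source_rows}

def incremental_load(target, source_rows, last_extracted):
    """Incremental load: upsert only rows changed after the saved date."""
    new_watermark = last_extracted
    for row in source_rows:
        if row["updated_at"] > last_extracted:
            target[row["id"]] = dict(row)
            new_watermark = max(new_watermark, row["updated_at"])
    return new_watermark

source = [
    {"id": 1, "value": "a", "updated_at": date(2024, 1, 1)},
    {"id": 2, "value": "b", "updated_at": date(2024, 1, 2)},
]

# First run: load the full history and remember the latest change date.
target = full_load(source)
watermark = max(row["updated_at"] for row in source)

# Later, one row changes and one row is added in the source.
source[1] = {"id": 2, "value": "b2", "updated_at": date(2024, 1, 5)}
source.append({"id": 3, "value": "c", "updated_at": date(2024, 1, 6)})

# Subsequent run: bring in only the modified and new records.
watermark = incremental_load(target, source, watermark)
print(sorted(target), target[2]["value"], watermark)
```

Only rows 2 and 3 are touched in the second run; row 1 is skipped because it has not changed since the saved date.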

What role does the ETL system play in the data warehouse?

The ETL system:

- Removes errors and fills in gaps in data.

- Provides established measures of confidence in the data.

- Captures and stores the flow of transactional data.

- Adjusts data from numerous sources so that it can be combined.

- Structures data so that end-user tools can use it.

The interviewer can ask you these ETL Interview Questions to test your knowledge of the role of the ETL system.

What data formats are used in the ETL system?

Flat files, XML datasets, independent DBMS working tables, normalized entity/relationship (E/R) schemas, and dimensional data models are some of the data formats used in ETL. The interviewer can ask you these ETL Interview Questions to test your knowledge of the ETL system. These questions are also among ETL interview questions for 5 years experience professionals.

In an ETL System, what is Data Profiling?

Data profiling is a comprehensive study of a data source’s quality, scope, and context, carried out so that an ETL system can be built. At one extreme, an immaculate data source that has been carefully maintained before arriving at the data warehouse requires few transformations and little human intervention to load directly into the final dimension tables and fact tables. The interviewer can ask you these ETL Interview Questions to test your knowledge of Data Profiling. These questions are also among ETL interview questions for 5 years experience professionals.
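A very small profiling pass might count nulls and distinct values per column. The `profile` function and the sample rows below are hypothetical, and real profiling tools report far more (patterns, ranges, key candidates), so treat this as a sketch of the idea only.

```python
def profile(rows, columns):
    """Return basic quality statistics for each column of a list of dict rows."""
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        # Treat None and empty strings as missing values.
        non_null = [v for v in values if v is not None and v != ""]
        report[col] = {
            "rows": len(values),
            "nulls": len(values) - len(non_null),
            "distinct": len(set(non_null)),
            "min": min(non_null) if non_null else None,
            "max": max(non_null) if non_null else None,
        }
    return report

rows = [
    {"id": 1, "country": "DE"},
    {"id": 2, "country": ""},
    {"id": 3, "country": "DE"},
    {"id": 4, "country": None},
]

report = profile(rows, ["id", "country"])
for column, stats in report.items():
    print(column, stats)
```

Here the profile shows that `id` is complete and unique, while half of the `country` values are missing, which tells the ETL designer where transformation rules or default values are needed.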

What is the purpose of an ETL validator?

ETL Validator is a data testing solution that makes data integration, data warehouse, and data migration projects much easier to test. It extracts, loads, and validates data from databases, flat files, XML, Hadoop, and business intelligence systems using patented ELV architecture. The interviewer can ask you these advanced ETL Interview Questions to test your knowledge of ETL validator. These questions are also among ETL interview questions for 5 years experience professionals.

What is the difference between a mapping, a session, a worklet, and a mapplet?


| Term | Description |
| --- | --- |
| Mapping | A mapping depicts the flow of data from a source to a destination. |
| Workflow | A workflow is a set of instructions that tells the Informatica server how to perform tasks. |
| Mapplet | A mapplet lets you configure or create a set of transformations. |
| Worklet | A worklet is a graphical representation of a set of tasks. |
| Session | A session is a set of instructions that specifies how and when data should be transferred from sources to targets. |

The interviewer can ask you these advanced ETL Interview Questions to test your knowledge of mapping, session, worklet, and mapplet. These questions are also among ETL interview questions for 5 years experience professionals.

What is the Data Pipeline, and how does it work?

A data pipeline is any processing element that transports data from one system to another. Any application that uses data to provide value can benefit from a Data Pipeline. It can be used to integrate data across applications, develop data-driven web products, and conduct data mining operations. Data engineers build the data pipeline. The interviewer can ask you these advanced ETL Interview Questions to test your knowledge of the data pipeline.
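A pipeline can be as simple as a chain of small processing stages, each feeding the next. The sketch below uses Python generators; the stage names and the `user,amount` line format are invented for illustration.

```python
def read_events(lines):
    """Stage 1: read raw lines from a source and strip whitespace."""
    for line in lines:
        yield line.strip()

def parse(events):
    """Stage 2: skip blank lines and turn 'user,amount' text into records."""
    for event in events:
        if not event:
            continue
        user, amount = event.split(",")
        yield {"user": user, "amount": float(amount)}

def total_by_user(records):
    """Stage 3: aggregate the records for the consuming application."""
    totals = {}
    for record in records:
        totals[record["user"]] = totals.get(record["user"], 0.0) + record["amount"]
    return totals

raw_lines = ["alice,10\n", "\n", "bob,5.5\n", "alice,2\n"]
totals = total_by_user(parse(read_events(raw_lines)))
print(totals)
```

Because each stage is a generator, rows flow through one at a time, so the same structure works for inputs that do not fit in memory.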

What is Hash Partitioning, and how does it work?

In hash partitioning, the Informatica server applies a hash function to the partition keys to distribute rows among the partitions. It is used to ensure that rows with the same partitioning key end up in the same partition. The interviewer can ask you these advanced ETL Interview Questions to test your knowledge of hash partitioning. These questions are also among ETL interview questions for 5 years experience professionals.
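The general technique can be sketched outside of any ETL tool. The code below is not how Informatica implements it; it is a minimal illustration that uses `zlib.crc32` as a stand-in hash function, with hypothetical row data and function names.

```python
import zlib

def partition_for(key, num_partitions):
    """Map a partition key to a partition number with a stable hash."""
    # crc32 is stable across runs, unlike Python's salted built-in hash() for str.
    return zlib.crc32(str(key).encode("utf-8")) % num_partitions

def hash_partition(rows, key_field, num_partitions):
    """Distribute rows so that equal keys always share a partition."""
    partitions = [[] for _ in range(num_partitions)]
    for row in rows:
        partitions[partition_for(row[key_field], num_partitions)].append(row)
    return partitions

rows = [
    {"customer_id": "C1", "amount": 10},
    {"customer_id": "C2", "amount": 5},
    {"customer_id": "C1", "amount": 7},
    {"customer_id": "C3", "amount": 1},
]

partitions = hash_partition(rows, "customer_id", 3)
for number, partition in enumerate(partitions):
    print(number, partition)
```

Both `C1` rows land in the same partition, which is what allows a downstream aggregation per customer to run independently on each partition.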


Conclusion

ETL testing has become a popular trend due to abundant employment opportunities and lucrative salary options. ETL Testing is one of the cornerstones of data warehousing and business analytics, with a significant market share. Many software vendors have incorporated ETL testing tools to make this process more organized and straightforward.

Most employers looking for ETL testers are looking for candidates with specific technical skills and experience. No worries; this platform is a fantastic resource for novices and experts. We’ve covered 35+ ETL testing interview questions in this article, ranging from freshers to experienced level questions commonly asked during interviews.

Frequently Asked Questions

For the last 25 years, Microsoft SQL Server has been used to analyze data. When the data coming from the source systems are inconsistent, the SQL Server ETL (Extraction, Transformation, and Loading) process comes in handy.

In this instance, the first thing you should do is standardize (validate/transform) all of the data coming in before loading it into a data warehouse. ETL has a clear advantage in terms of delivering data that has been cleansed and transformed. Let’s move on to the next FAQ for ETL Interview Questions.

A data mart is a simple data warehouse that focuses on a single subject or business line. Since they do not have to spend time searching through a more complex data warehouse or manually aggregating data from various sources, teams can access data and gain insights faster with a data mart. Let’s move on to the next FAQ for ETL Interview Questions.

The extraction of data from source systems, cleaning, transformation, and loading into the target data warehouse are all part of an ETL workflow. Formal methods exist for modeling the schema of source systems or databases, such as entity-relationship diagrams (ERD).

Similarly, standard data models such as star schema and snowflake schema can be used in the destination data warehouse. We have a well-established relational algebra for databases, but no such algebra exists for ETL workflows. ETL workflow models, in contrast to those for the source and target areas, are still in their infancy. Let’s move on to the next FAQ for ETL Interview Questions.

The main benefit of using an ELT approach is that you can consolidate all raw data from various sources into a single, unified repository (a single source of truth) and have instant access to all of your data. It enables you to work more freely and makes it simple to store new, unstructured data.

When working with new data, data analysts and engineers save time because they don’t have to develop complex ETL processes before the data is loaded into the warehouse. Let’s move on to the next FAQ for ETL Interview Questions.

5. Which tool is used for ETL?

The following list enumerates the best open source and commercial ETL software systems.

- Xplenty

- Skyvia

- IRI Voracity

- Xtract.io

- Dataddo

- DBConvert Studio By SLOTIX s.r.o.

- Informatica – PowerCenter

- IBM – InfoSphere Information Server

- Oracle Data Integrator

- Microsoft – SQL Server Integration Services (SSIS)

- Ab Initio

- Talend – Talend Open Studio for Data Integration

- CloverDX Data Integration Software

- Pentaho Data Integration

- Apache NiFi

- SAS – Data Integration Studio

- SAP – BusinessObjects Data Integrator

- Oracle Warehouse Builder

- Sybase ETL

- DBSoftlab

- Jasper

Let’s move on to the next FAQ for ETL Interview Questions.

You can use a hybrid data mart to combine data from sources other than a data warehouse. This could be useful in a variety of scenarios, particularly when ad hoc integration is required, such as when a new group or product is added to the organization. Let’s move on to the next FAQ for ETL Interview Questions.

To cleanse your data, follow these five steps. Consider these steps and how they might apply to your corporation. The goal is to get the most out of your data while avoiding errors and inaccuracies.

- Create a data cleansing plan.

- Choose a standard method for entering new data.

- Verify data accuracy and eliminate duplicates.

- Fill in any gaps in your data.

- Develop an automated process moving forward.

Let’s move on to the next FAQ for ETL Interview Questions.

Here’s a stepwise guide to get started with ETL.

- Install an ETL (Extract, Transform, and Load) tool: ETL tools come in distinct shapes and sizes. Choose the alternative which is suitable for you or your company.

- Keep an eye out for tutorials: Tutorials will help you learn about best practices and the most effective ETL tools.

- Register for classes: Classes will provide you with an excellent opportunity to interact with industry experts.

- Read a lot of books: Both beginners and experts can benefit from books that provide relevant ETL information.

- Practice: The more you use the ETL tools at your disposal, the more proficient you will become.

Let’s move on to the next FAQ for ETL Interview Questions.

In a full load, the entire data dump takes place the first time a data source is loaded into the warehouse. In an incremental load, the delta between the target and source data is loaded at regular intervals. The date of the last extraction is saved so that only records added after that date are loaded. Let’s move on to the next FAQ for ETL Interview Questions.

10. What is the future of ETL?

Future ETL will provide a data management framework – a holistic and hybrid approach to big data management. ETL solutions will include data governance, data quality, and data security in addition to data integration. To support data-driven business initiatives and imperatives, they will be scalable, versatile, high-performance, and flexible.
