Introduction to Database Normalization in SQL Server

Assumes you’ve been assigned to oversea your company’s relational database system. You immediately run a few early queries to acquaint yourself with the data, eager to impress. Only to discover the tables in a state of disorder. You come to a halt. You’re concerned that the conflicting dependencies will negatively influence future data manipulation searches and long-term studies.

However, you’re unsure what actions to take to redesign the tables properly. And the unwanted impulse to go through your notes from that database administration class you took a lifetime ago strikes.

What is Database Normalization in SQL Server?

Database normalization in SQL or normalization in SQL server is reorganizing a relational database in line with a set of “normal forms” to eliminate data redundancy and increase data integrity. Edgar F. Codd initially presented it as a component of his relational paradigm.

The term “normalization in SQL” refers to structuring a database’s columns also known as attributes and tables also known as relations such that database integrity requirements correctly impose their dependencies. It’s done through a process of synthesis (forming database design) or deconstruction (developing an established database design) that involves following some formal principles.

Don’t be scared off by these types of definitions. To put it another way, normalization in SQL is a method of establishing a relational database that is as effective as possible. Databases should be arranged in such a way that they reduce duplication and eliminate dependency abnormalities.

In other simplest terms, the structure of your database should be simple. The goal of database normalization in SQL is to streamline data by removing unnecessary information. Data redundancy refers to the presence of several copies of the same information in the same database, dispersed across various places.

The following are some of the disadvantages of data redundancy:-

- Data maintenance becomes time-consuming, and data deletion and upgrades become difficult.

- It leads to discrepancies in data.

- Anomalies in the insert, update, and delete are becoming more common. For example, an update anomaly indicates that all copies of the same record replicated across the database will need to be updated to maintain the record consistently.

- Redundant data expands the volume of a database and consumes excessive disk space.

Also Read:- All 7 Types of Keys In DBMS.

Types of Normalization in SQL Server with Examples

Database normalization in SQL (Also known as normalization in SQL server) is a procedure that should be followed for each database that you create. Normal Forms is the process of taking a database architecture and applying a set of defined criteria and rules to it. The process of database normalization in SQL is further divided into the following types of normalization in SQL:

1. First Normal Form (1 NF)

The problem of data integrity is addressed in this Normal Form. The term atomicity refers to the fact that the table’s values should not be split further. A single cell cannot carry multiple values, to put it simply. The First Normal Form of normalization in SQL is broken when a table has a compound or multi-valued attribute. Let’s understand this with an example:-

|

Student_ID |

Student Name |

Contact Number |

Fee Paid |

|

1SID001 |

Barry |

+91 86245864222 |

50,000 |

|

1SID002 |

Alex |

+91 69874532357 +91 69561485383 |

40,000 |

|

1SID003 |

Sam |

+91 36541298742 +91 48637845236 |

50,000 |

|

1SID004 |

Chris |

+91 96345624863 |

45,000 |

We could observe how the Contact Number field has two values in the table above. As a result, it broke the first NF. When we apply the first NF to the preceding table, we obtain the following table.

|

Student_ID |

Student Name |

Contact Number |

Fee Paid |

|

1SID001 |

Barry |

+91 86245864222 |

50,000 |

|

1SID002 |

Alex |

+91 69874532357 |

40,000 |

|

1SID003 |

Sam |

+91 36541298742 |

50,000 |

|

1SID004 |

Chris |

+91 96345624863 |

45,000 |

As a result, we’ve achieved atomicity, and each column now has its own set of values.

2. Second Normal Form (2 NF)

This set of rules expands on the ones laid out in 1NF:-

- Follow 1NF’s rules to the letter.

- Remove data that isn’t dependent on the primary key of the table, transfer it to a different table, or create a new table and primary key.

- Table associations are identified via foreign keys.

The table must be in the first NF as the first criteria in the 2nd NF of of normalization in SQL. There should be no partial dependencies in the table. A non-prime attribute is determined by the correct subset of primary keys in this case. Take a look at the sample below to get a clear grasp of this context.

|

Student_ID |

Course_ID |

Fee Paid |

|

1SID001 |

CMBA01 |

50,000 |

|

1SID002 |

CBCA01 |

40,000 |

|

1SID003 |

CMBA02 |

50,000 |

|

1SID004 |

CBTECH05 |

45,000 |

The primary keys in this table are Student_ID and Course_ID. Office Location is a non-key property. Office Location is solely dependent on Department ID in this scenario, that is only part of the primary key. As a consequence, the 2NF Form is not satisfied by this table.

To convert this data to 2nf, we must divide it into two halves. This gives us the following tables:

|

Student_ID |

Course_ID |

|

1SID001 |

CMBA01 |

|

1SID002 |

CBCA01 |

|

1SID003 |

CMBA02 |

|

1SID004 |

CBTECH05 |

|

Course_ID |

Fee Paid |

|

CMBA01 |

50,000 |

|

CBCA01 |

40,000 |

|

CMBA02 |

50,000 |

|

CBTECH05 |

45,000 |

As you can see, we’ve eliminated the partial functional dependency we had before. Now, the field Fee Paid in the table is completely reliant on the table’s primary key, which is Course ID

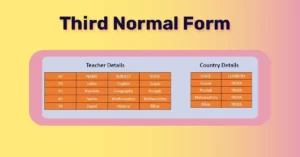

3. Third Normal Form (3 NF)

The same criterion as before applies: the table must be in the second normal form of normalization in SQL before moving on to 3NF. The third stipulation is that non-prime characteristics should not be transitively dependent. Non-prime characteristics (those that do not constitute a candidate key) must not be reliant on all other non-prime variables in a table. A transitive dependence is a functional relationship in which X Z (X determines Z) is determined indirectly by X Y and Y Z (where Y X is not the case). According to the third normal form, fields inside tables that are not dependent on the key should be removed.

- In 2 NF, there is already a table.

- Non-Primary key columns must not rely on non-Primary key columns.

- There is no functional dependence that is transitive.

Take a look at the sample below to get a clear grasp of this context.

|

Student_ID |

Student Name |

Subject_ID |

Subject |

Address |

|

1SID001 |

Barry |

SMG01 |

Management |

Delhi |

|

1SID002 |

Alex |

SCS09 |

SQL |

Banglore |

|

1SID003 |

Sam |

SAC01 |

Accounts |

Hyderabad |

|

1SID004 |

Chris |

SCS05 |

Java |

Kolkata |

Student ID determines Subject ID, and Subject ID decides Subject in the table above. As a result, the Subject is determined by Student ID. This indicates that we have a transitive functional dependency, and this structure doesn’t quite adhere to the third normal form.

In order to reach the 3nf of database normalization in SQL server, we must split the table as follows:

|

Student_ID |

Student Name |

Subject_ID |

Address |

|

1SID001 |

Barry |

SMG01 |

Delhi |

|

1SID002 |

Alex |

SCS09 |

Banglore |

|

1SID003 |

Sam |

SAC01 |

Hyderabad |

|

1SID004 |

Chris |

SCS05 |

Kolkata |

|

Subject_ID |

Subject |

|

SMG01 |

Management |

|

SCS09 |

SQL |

|

SAC01 |

Accounts |

|

SCS05 |

Java |

As seen in the tables above, all non-key characteristics are now fully functioning and solely rely on the main key. The fields Student Name, Subject ID, and Address in the first table are all dependent on Student ID. The subject is only reliant on the Subject ID in the second table.

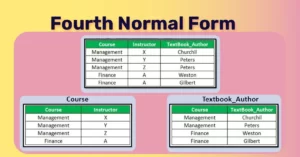

4. Fourth Normal Form ( BCNF or 4 NF)

This is also referred to as 3.5 NF or Boyce Codd Normal Form. It was created by Raymond F. Boyce as a higher version of 3NF to address specific sorts of anomalies that were not addressed by 3NF. The table must fulfill the 3rd Normal Form of normalization in SQL server before moving to BCNF. If every functional dependence A B is true in BCNF, then A must be the table’s Super Key. Consider the table below:

|

Student_ID |

Subject |

Professor |

|

1SID001 |

Management |

Dr.Atul |

|

1SID002 |

SQL |

Dr. Himani |

|

1SID003 |

Accounts |

Dr. Akash |

|

1SID004 |

Java |

Dr. Rajat |

Multiple subjects can be enrolled by a single student. One subject may be taught by numerous professors, and each student is allocated to a professor for that subject. Except for BCNF, all of the standard forms are met in this table. Why?

As you can see, the main key is formed from Student ID and Subject, indicating that the Subject field is a prime characteristic. But, Professor Subject, there is one more reliance. Professor is a non-prime attribute, that is not allowed under BCNF, while Subject is a prime attribute. We will now divide the table structure pieces in order to fulfill the BCNF. Student ID, which already exists, and Professor ID, a newly generated column, will be stored in one table.

|

Student_ID |

Professor_ID |

|

1SID001 < /td> |

1DP01 |

|

1SID002 |

1DP02 |

|

1SID003 |

1DP03 |

|

1SID004 |

1DP04 |

|

Professor_ID |

Subject |

Professor |

|

1DP01 |

Management |

Dr.Atul |

|

1DP02 |

SQL |

Dr. Himani |

|

1DP03 |

Accounts |

Dr. Akash |

|

1DP04 |

Java |

Dr. Rajat |

We have fulfilled the Boyce Codd Normal Form by doing so.

Normalization and Denormalization in SQL server with Examples

Denormalization is a database optimization method in which duplicated data is added to one or more tables. In a relational database, this could assist to avoid costly joins. It’s worth noting that denormalization doesn’t quite imply the absence of normalization. It’s a post-normalization optimization approach.

In a traditional database after normalization in SQL, We store data in distinct logical tables and try to avoid redundant data in a standard normalized database. We may try to keep each piece of data in the database to just one copy.

We might have had a Courses table as well as a Teachers table in a normalized database, for example. The teacherID for a Course would be stored in each item in Courses, but not the teacherName. A join between such two tables would be used to acquire a list of any and all Courses containing the Teacher’s name. In some way, this is beneficial; if a teacher’s name changes, we have only to edit the name once. The disadvantage is that if databases are huge, joins on tables may take an unreasonably long time.

Advantages of Normalization in SQL

Reason why normalization is an appealing option in RDBMS concepts may be shown here.

- Standardization eliminates the copied information, allowing a smaller database to be maintained. As a result, the size of the database is reduced in general.

- Better execution is assured, which is related to the previous point. As the quantity of information bases shrinks, the time it takes to process it becomes faster and more constrained, enhancing response time and efficiency.

- Narrower tables may be possible when standardized tables are altered and feature fewer segments, allowing for more data items per page.

- With fewer files per table, support assignments are completed faster (file modifies).

- Also comprehends the option of just connecting the databases that are necessary.

Disadvantages of Normalization in SQL

More tables to join: As information is dispersed over more tables, the necessity to connect tables grows, making the task more time-consuming. In addition, the database becomes more compelling to recognize.

Tables will include codes rather than true information since rehashed data will be stored as code lines rather than genuine data. As a result, there is always a requirement to visit the query table.

The database becomes progressively sluggish as the common structural type develops. To carry out the standardization cycle efficiently, accurate information on the many conventional structures is required. Unscrupulous usage may result in an abysmal plan full of serious anomalies and data irregularities.

1.How to apply Normalization in SQL

This section will guide you through normalization in SQL tutorial. You can use simple and basic SQL commands to perform database normalization in SQL server. The main objective of normalization in SQL server is to follow the rules of normalization in SQL. Lets’s get it started.

Basic Commands | Normalization in SQL tutorial

|

Commands |

Perform |

|

CREATE DATABASE |

Creates a new database |

|

ALTER DATABASE |

Modifies a database |

|

CREATE TABLE |

Creates a new table |

|

ALTER TABLE |

Modifies a table |

|

DROP TABLE |

Deletes a table |

|

SELECT |

Extracts data from a database |

|

UPDATE |

Updates data in a database |

|

DELETE |

Deletes data from a database |

|

INSERT INTO |

Inserts new data into a database |

|

CREATE INDEX |

Creates an index (search key) |

Related Topic:- Composite Key in Sql

Conclusion

One of the most important responsibilities for the effective installation of a DBMS that fulfills the data needs of a business application to be built is database design. Database normalization in DBMS is a critical procedure that aids in the creation of realistic SQL databases that are both cost-effective and have improved security models. The normalized data process relies heavily on the entire functioning database requirements.

So far, we’ve gone over topics like Normalization in SQL server (1NF, 2NF, and 3NF) and Database Demoralization in relation to Relational Database Systems (RDBMS). Knowing the fundamentals of database normalization in SQL server will help you comprehend relational ideas and the requirement for numerous tables in database design structures. It’s more prevalent in data warehousing settings, where you’ll most likely be working on a denormalization process.

In fact, querying denormalized data is far more efficient than querying normalized data. Thus, the article on SQL Normalization draws to a close. I hope you now have a better understanding of normalization ideas.

Frequently Asked Questions

1. What is Normalization 1NF 2NF 3NF?

If a relation has an atomic value, it is in 1NF. 2NF. If a relation is in 1NF and so all non-key characteristics are fully functioning and dependent on the main key, it is in 2NF. 3NF. If a relation is in 2NF and there is no transition dependence, it is in 3NF.

- A relation violates the first normal form if it has composite or multi-valued attributes, or it is in 1NF if it does not include composite or multi-valued attributes. If every feature in a connection is a just valued attribute, it is said to be in 1NF.

- A relation is 2NF when it has No Partial Dependency, which means that no non-prime attribute of the table is dependent on any suitable subset of any candidate key.

- Values in records that aren’t part of the key aren’t supposed to be in the table. In general, whenever the contents of a collection of columns could apply to even more than one record in a table, consider separating those data into their own table.

For more information, read our article on Database Normalization in SQL Server.

2. What is the purpose of normalization?

Normalization is a method of information that is organized efficiently. The normalization process has two basic goals: to reduce duplicate data by keeping the very same data in several tables, and verify that data relationships make sense by only putting relevant data in the table. Because the same data is duplicated in several locations, data redundancy increases the database’s size needlessly. During insert, remove, and update actions, inconsistency issues might develop. Let’s look at the objective of database normalization in SQL server to understand the purpose of normalization:

- It is used to clean up the relational table of redundant data and database anomalies.

- By assessing new data types utilised in the table, normalisation helps to decrease repetition and complexity.

- It’s a good idea to break down a huge database table into multiple smaller tables and use relationships to connect them.

- It eliminates putting duplicate data or groups into a table.

- It lowers the likelihood of abnormalities in a database.

For more information,read our article on Database Normalization in SQL Server.

An SQL key is a unique field (or attribute) or a set of fields that may be used to distinguish rows (or tuples) in a database in a unique way. SQL keys make sure there aren’t any rows containing duplicate data. They also aid in the establishment of a link between different database tables. There are 7 types of keys in SQL:-

- Primary Key

- Foreign Key

- Candidate Key

- Super Key

- Alternate Key

- Composite Key

- Artificial Key

For more information, read our article on Database Normalization in SQL Server.

4. What is a super key in DBMS?

In a database management system (RDBMS) table, a super key is a set of fields that uniquely identifies any record. A primary key is a similar notion in which the superkey is lowered to the bare minimum of columns necessary to identify each individual row.

The super key’s sole objective is to discover the tuples in the database’s given table. The candidate key is only a component of the super key in this superset. Super keys are all those qualities in a database that can uniquely identify the table’s other attributes. For more information, read our article on Database Normalization in SQL Server.

5. What is an anomaly in DBMS?

A database anomaly is a fault in a database that usually emerges as a result of shoddy design and putting it all in a flat database. In most cases, this is eliminated through the normalization procedure, which involves separating and connecting tables. The sorts of anomalies that cause table inconsistencies, degradation of integrity, and duplicated data are listed below.

-

- Data Redundancy

In a relational database, data redundancy occurs when two or even more rows or columns contain the same or repeating value, causing memory to be used inefficiently. - Insert Anomaly

When some characteristics and metadata are to be added into a relational database without the presence of other attributes, an insert anomaly arises.

- Data Redundancy

- Update Anomaly

When duplicated data is updated just once but not in all places, an anomaly develops. As a result, your database or table is in an irregular condition.

- Delete Anomaly

When certain entries are lost or deleted from a database table as a result of the removal of other records, an anomaly arises.

For more information, read our article on Database Normalization in SQL Server.

6. What are the advantages of normalization?

Better execution is assured, which is related to the previous point. As the quantity of information bases shrinks, the time it takes to process it becomes faster and more constrained, enhancing response time and speed. Data normalization is much more than just rearranging data in the database as data becomes increasingly useful to any form of organization. Here are among the most significant advantages:

- Reduces data duplication

- Ensures data consistency in the database.

- Database architecture that is more flexible and secure

- Execution that is better and faster

- Greater database organization in general

For more information, read our article on Database Normalization in SQL Server.

A join is a SQL action that creates a relation between various or even more database tables by establishing a link between them based on matching columns. In a SQL database management system, join commands are used in the majority of complicated queries.

In a nutshell, In a database management system, a join is a binary action that enables you to combine a join product and a selection in a single statement. The purpose of a join criterion is to aid in the combination of data of two or even more DBMS tables. The primary and foreign keys are used to link tables in a database management system. There are mainly 2 types of join:

- Inner Join

- Outer Join

For more information, read our article on Database Normalization in SQL Server.

The SQL programming language is used to access, manipulate, and communicate with databases. SQL can execute almost any function, including accessing data from a database, building a new database, and altering data and databases, such as insertion, deletion, and updating. It’s an easy-to-use, domain-specific language. SQL is a language for interacting with databases. It is the common language for RDBMS, according to ANSI (American National Standards Institute). SQL queries are also used to accomplish duties like updating data in a database and retrieving data from one.

- It is commonly used in business intelligence software.

- SQL is used for manipulation of data and data testing.

- SQL is heavily used in data science tools. SQL is required by big data tools like Spark and Impala.

- It is among the most demanding industrial skills to acquire.

For more information, read our article on Database Normalization in SQL Server.

The ability to aggregate data from various tables inside a single database is SQL’s biggest feature. In comparison to Python, SQL is easier and has a smaller set of functions. Functions, which are scripts that accomplish specific tasks, are used in SQL queries.

10. What is Normalization in SQL?

The process of structuring data in the database is known as normalization. This includes generating tables and defining links between them according to rules aimed to secure data while also allowing the database to be more flexible by removing duplication and inconsistent dependencies.

Data that is redundant costs disc space and causes maintenance issues. If data is to be altered in more than a location, the data should be modified in the very same way in each location. If data is only saved in the Table and in no other databases, changing a customer’s address is significantly easier. For more information, read our article on Database Normalization in SQL Server.